AWS Data Engineer Resume Examples

Jul 18, 2024

"Crafting your AWS data engineer resume: a step-by-step guide to creating a 'cloud nine' application that stands out in the tech industry."

AWS Big Data Engineer

AWS Data Pipeline Engineer

AWS Cloud Data Architect

AWS Machine Learning Data Engineer

AWS Data Solutions Engineer

Senior AWS Data Infrastructure Engineer

AWS Data Analytics Engineer

AWS Data Operations Engineer

AWS Data Security Engineer

Certified AWS Data Systems Engineer

AWS Big Data Engineer resume sample

When applying for this role, highlight your experience with big data technologies such as Hadoop and Spark and AWS services such as Amazon Redshift or Amazon EMR. Showcase your understanding of data pipelines and ETL processes, as these are fundamental to the role. Include relevant certifications such as AWS Certified Data Engineer – Associate. Describe specific projects where you optimized data processes, using the 'skill-action-result' format to illustrate your contributions. Demonstrating your ability to analyze large datasets and derive actionable insights will significantly strengthen your application.

- Led the design and implementation of scalable data processing systems using Amazon EMR and Amazon Redshift, increasing data throughput by 50%.

- Collaborated with data scientists and analysts to define data requirements, ensuring delivery of high-quality data solutions.

- Developed ETL pipelines that optimized data extraction and transformation, reducing latency by 30%.

- Implemented enhanced data models that improved query performance by 25%, supporting critical analytics operations.

- Monitored and troubleshot data processing jobs, achieving 99.9% system uptime and consistent performance.

- Applied security and governance best practices within the AWS environment, leading to a 20% reduction in data breaches.

- Built data processing frameworks using Apache Spark that reduced processing time for large datasets by up to 45%.

- Collaborated with cross-functional teams to support data-driven decision-making, increasing business process efficiency by 15%.

- Designed ETL processes to integrate data from various sources, improving data quality and reliability.

- Optimized data models for complex analytics tasks, significantly improving retrieval performance.

- Performed ongoing monitoring and improvement of data jobs, maintaining consistent quality and performance standards.

- Implemented AWS-based cloud data storage solutions, reducing costs by 20% while maintaining data integrity.

- Contributed to a team project applying machine learning frameworks, which improved predictive analytics capabilities.

- Automated data processing workflows, increasing the speed and accuracy of data analytics operations by 35%.

- Collaborated with business analysts to develop data solutions that addressed key business challenges.

- Designed scalable ETL processes for integrating large datasets, increasing data processing speed by 40%.

- Assisted in developing automated data pipelines, reducing manual intervention and increasing reliability.

- Worked on a project to migrate data systems to the cloud, reducing infrastructure costs by 30%.

- Participated in team meetings and collaborated on data solutions, promoting best practices and efficiency.

AWS Data Pipeline Engineer resume sample

When applying for this position, showcase your proficiency in data integration and ETL processes. Highlight any experience with tools such as Apache Spark or AWS Glue, and mention relevant projects that illustrate your hands-on skills. Familiarity with data warehousing concepts is also beneficial. Include any certifications, such as AWS Certified Data Analytics – Specialty, to strengthen your profile. Use the 'skill-action-result' approach to describe specific situations where your work improved data flow or reduced processing times, demonstrating your impact on previous projects.

- Designed and deployed an automated data pipeline using AWS Glue and Amazon Redshift that increased data processing efficiency by 40%, improving decision-making.

- Optimized existing ETL processes, reducing runtime by 30% and lowering costs by implementing on-demand AWS Lambda functions.

- Collaborated with data analysts to refine data models, ensuring 99% data quality and strengthening data-driven insights.

- Implemented security controls for Amazon S3, minimizing data breach risks and safeguarding sensitive business information.

- Managed a team of junior engineers, improving team productivity by 25% through mentorship and streamlined processes.

- Stayed current with emerging AWS services, recommending cost-effective solutions that reduced the budget by 15%.

- Spearheaded the creation of scalable data pipelines that processed datasets 10 times faster, enhancing product capabilities.

- Integrated AWS Data Pipeline with on-premises data storage, enabling seamless hybrid data management and increasing data accessibility.

- Led weekly stakeholder meetings, ensuring alignment and delivering data solutions that met business requirements and reduced operational bottlenecks.

- Implemented robust data governance frameworks, achieving a 95% compliance rate with industry standards and best practices.

- Used AWS Lambda for real-time data processing, increasing system responsiveness and reducing latency by 20%.

- Designed and implemented ETL workflows using AWS Glue, reducing data processing times for real-time analytics by 50%.

- Automated data pipeline monitoring, reducing manual oversight by 70% and increasing reliability.

- Customized SQL scripts for complex data queries, accelerating data retrieval by 35%.

- Developed data migration strategies to AWS, ensuring 99% data integrity and minimal business disruption.

- Executed data migration tasks to Amazon Redshift, improving data storage efficiency and reducing costs by 15%.

- Monitored data workflows, proactively identifying and resolving 95% of data processing bottlenecks.

- Collaborated with cross-functional teams to implement data warehousing solutions, supporting business intelligence objectives.

- Enhanced ETL processes, increasing data processing speed by 20% through optimized database queries.

AWS Cloud Data Architect resume sample

When applying for this role, showcase your experience designing scalable cloud architectures. Highlight projects where you implemented cost-effective solutions while maintaining performance and security. Certifications such as AWS Certified Solutions Architect can strengthen your application. Illustrate your ability to optimize databases and manage data migrations. Use specific metrics in a 'challenge-action-result' format to show how your strategies improved efficiency and reduced costs for previous employers.

- Designed and implemented a scalable data architecture using Amazon Redshift, reducing processing time by 30% and enabling complex analytics.

- Led cross-functional teams to define and execute data strategies, resulting in a 25% increase in data quality compliance.

- Developed ETL workflows with AWS Glue, integrating disparate data sources and streamlining data warehousing operations by 20%.

- Implemented data governance practices that improved data accuracy, contributing to a 40% reduction in data inconsistency errors.

- Conducted performance tuning on large datasets in Amazon S3, optimizing queries and boosting system performance by 50%.

- Mentored junior team members, facilitating upskilling and contributing to improved team productivity.

- Architected data processing workflows on AWS, improving data accessibility and reducing ETL processing times by 35%.

- Collaborated with stakeholders to develop efficient data models, supporting new analytics capabilities and improving business insights.

- Implemented security protocols to protect sensitive data, with zero data breaches during tenure.

- Managed AWS infrastructure resources, reducing operational costs by 15% through optimization strategies.

- Kept the team updated on AWS service advancements, enabling proactive adoption that saved $200,000 annually.

- Developed and maintained ETL processes using AWS Lambda, improving data retrieval speed for analytics platforms by 25%.

- Contributed to the design of a data lake architecture that supported machine learning models, enhancing predictive analytics capabilities.

- Deployed Amazon RDS instances with high-availability configurations, maintaining 99.9% uptime.

- Provided technical guidance on SQL optimization, resulting in a 40% decrease in query processing time for complex reports.

- Analyzed large datasets to uncover trends that informed strategic decisions, boosting sales by 20% over two quarters.

- Developed dashboards with data visualization tools to provide clear insights and improve decision-making.

- Standardized data reporting procedures across departments, significantly reducing reporting time and increasing reliability.

- Enhanced data analysis capabilities by implementing advanced SQL querying techniques for complex datasets.

AWS Machine Learning Data Engineer resume sample

When applying for this role, highlight any experience with machine learning frameworks such as TensorFlow or PyTorch. Showcase projects that demonstrate your ability to build and deploy machine learning models. If you've taken courses or earned certifications in data science or machine learning, mention them, including their duration and key topics. Use specific examples in a 'skill-action-result' format to show how your skills informed decisions or improved processes in previous roles.

- Led a team to develop scalable data pipelines on AWS, improving data processing speed by 30% and reducing costs by 15%.

- Engineered high-performance ETL processes, increasing the reliability and efficiency of data extraction by 25%.

- Collaborated with machine learning teams to design a data architecture optimized for processing large datasets efficiently.

- Developed and maintained robust data storage solutions using Amazon S3 and Amazon Redshift, boosting data retrieval speed by 40%.

- Ensured high data availability and security by implementing Amazon CloudWatch monitoring and IAM policies.

- Documented processes and data workflows for consistency and knowledge sharing across a cross-functional team of 15 engineers.

- Designed machine learning pipelines with Amazon SageMaker and AWS Glue that improved model performance by 20%.

- Migrated legacy data systems to the AWS Cloud, reducing operational costs by 25% and increasing data accessibility.

- Optimized ETL workflows, reducing data processing time by 30% and increasing data throughput.

- Implemented data quality measures that reduced erroneous data entries by 50% and improved accuracy.

- Spearheaded real-time data processing initiatives, improving system response time by 15%.

- Developed data pipelines to support analytics platforms, increasing data processing capacity by 25%.

- Collaborated with cross-functional teams to define data requirements and integrate machine learning models into data workflows.

- Managed data storage solutions, improving availability and performance and increasing data retrieval speed by 20%.

- Maintained ETL solutions and improved system monitoring, increasing data pipeline reliability by 30%.

- Analyzed data trends to support strategic business decisions, increasing sales opportunities by 10%.

- Developed automated reporting tools, reducing reporting time by 40% and improving data insights.

- Supported data integration projects across diverse teams, fostering collaboration and unified data practices.

- Monitored data quality and implemented improvements, resulting in a 30% reduction in data discrepancies.

AWS Data Solutions Engineer resume sample

When applying for this role, emphasize your technical skills in cloud architecture and data management. Highlight experience with services such as AWS Glue, Amazon Redshift, or Amazon S3, and your ability to design scalable data solutions. If you hold relevant AWS certifications, mention them along with the skills they represent. Use specific examples to show how your solutions improved data accessibility or analysis in past projects, detailing the impact on decision-making or operational efficiency.

- Led a team to implement an Amazon Redshift-powered data warehouse, improving query performance by 40% and exceeding stakeholders' expectations.

- Designed a secure data pipeline using AWS Glue and AWS Lambda that reduced data processing time by 50%.

- Implemented cost-effective Amazon S3 storage solutions, cutting storage expenses by 25% while maintaining system reliability.

- Collaborated with cross-functional teams to transform and migrate 10+ legacy databases to Amazon DynamoDB, improving scalability.

- Conducted regular code reviews, resulting in a 30% decrease in post-deployment errors and improving software reliability and uptime.

- Maintained comprehensive documentation of data architectures, ensuring consistency and compliance with security regulations.

- Designed efficient ETL processes using Amazon EMR, reducing data ingestion time for critical business analytics by 60%.

- Spearheaded projects that improved data visualization by integrating Amazon QuickSight, enabling 20% faster data-driven decisions.

- Optimized data transformation processes with Python, increasing processing speed by 15% and improving overall system efficiency.

- Ensured compliance with data governance policies, establishing monitoring protocols to maintain a 99.8% data accuracy rate.

- Took part in technical workshops that improved team proficiency in AWS best practices, leading to a 20% improvement in project delivery.

- Developed and managed scalable AWS data solutions, improving system capacity and storage utilization by 35%.

- Collaborated with stakeholders to redesign critical data models, improving data retrieval efficiency by 25%.

- Delivered peer-reviewed technical presentations on data architecture improvements, contributing to a 15% increase in team productivity.

- Implemented secure data access controls that achieved compliance with international data protection standards.

- Assisted in developing data solutions using AWS services, leading to a 20% increase in data processing efficiency.

- Supported cross-functional teams in deploying AWS Lambda functions, optimizing resource allocation by 12%.

- Contributed to detailed documentation that reduced onboarding time for new employees by 30%.

- Participated in AWS knowledge-sharing sessions, fostering a collaborative environment that resulted in a 10% performance improvement.

Senior AWS Data Infrastructure Engineer resume sample

When applying for this position, emphasize your experience with AWS services such as Amazon S3, Amazon Redshift, and AWS Glue. Highlight your proficiency in building data pipelines and ETL processes. Include relevant certifications such as AWS Certified Solutions Architect or AWS Certified Data Analytics – Specialty. Showcase specific projects where you improved data quality or reduced processing time, using clear metrics to demonstrate impact. Also describe how you collaborated with cross-functional teams to design scalable data solutions that meet business needs.

- Designed and implemented data pipelines using AWS Glue and AWS Lambda that increased data processing efficiency by 30%.

- Collaborated with cross-functional teams to establish data security and compliance best practices, reducing security threats by 25%.

- Optimized data storage on Amazon S3 and Amazon Redshift, reducing storage costs by 20% while improving data retrieval efficiency by 35%.

- Led architecture discussions to refine the data strategy, introducing cloud-native solutions that supported 50% growth in data processing demand.

- Mentored junior engineers on AWS services, resulting in a 15% increase in team productivity.

- Resolved complex data infrastructure issues, contributing to 98% uptime over the year and ensuring seamless data analytics operations.

- Enhanced data ingestion by implementing efficient Amazon Kinesis streaming solutions, reducing data latency by 45%.

- Implemented robust security measures to achieve compliance with GDPR and other data protection regulations.

- Improved data modeling practices, increasing data consistency and reliability and reducing data discrepancies by 50%.

- Streamlined ETL deployments with Terraform and AWS CloudFormation, improving deployment efficiency by 30%.

- Collaborated with data scientists to implement machine learning solutions, supporting advanced analytical models.

- Developed and managed ETL pipelines using Amazon Redshift that improved data transfer speeds by 60%.

- Integrated data encryption techniques that secured data and prevented unauthorized access incidents.

- Architected cloud migrations, transitioning 70% of on-premises data to AWS and reducing infrastructure costs by 30%.

- Implemented cost-efficient data storage solutions on Amazon DynamoDB, maintaining high performance and scalability.

- Led cloud infrastructure design projects, improving data architecture efficiency and reducing system downtime by 40%.

- Facilitated cross-departmental collaboration, integrating strategic solutions for smoother data transitions into AWS environments.

- Introduced data governance frameworks that ensured compliance and enhanced data integrity.

- Supervised data migration initiatives that moved 80% of legacy data platforms to AWS, minimizing downtime.

AWS Data Analytics Engineer resume sample

When applying for this role, showcase any experience with data visualization tools such as Tableau or Power BI. Highlight your skills in SQL and data warehousing, as these are foundational for analytics work. Certifications in data analytics or cloud computing, such as AWS Certified Data Analytics – Specialty, will strengthen your application. Provide specific examples of how your analytical skills drove key business decisions or improved processes, using a 'challenge-action-result' framework to illustrate your impact.

- Designed and implemented scalable data architectures, reducing query processing times by 30%.

- Developed data ingestion pipelines using AWS Glue, improving data integration efficiency by 25%.

- Collaborated with business teams to deliver insights via Amazon QuickSight, improving report clarity and decision-making.

- Optimized ETL processes to speed up data processing, saving 15 hours per week in report generation.

- Integrated machine learning algorithms into data analysis, increasing predictive analytics capabilities by 20%.

- Trained team members on emerging AWS technologies, boosting team proficiency in cloud computing solutions.

- Streamlined the ETL data pipeline, increasing data processing efficiency by 40%.

- Collaborated with cross-functional teams to deploy data warehouse solutions on Amazon Redshift, supporting scalable analysis.

- Led the development of a data governance framework that ensured data integrity and compliance, improving trust in data usage.

- Implemented Power BI dashboards that provided real-time insights, increasing operational productivity by 15%.

- Wrote complex SQL queries for data analysis, contributing to an improved data retrieval system for business applications.

- Developed business intelligence solutions using Tableau, strengthening strategic decision-making capabilities.

- Participated in migrating data warehouse systems to cloud platforms, ensuring a seamless transition with no business disruption.

- Designed key performance indicator (KPI) dashboards, enhancing executive stakeholders' ability to track company performance.

- Assisted in developing scalable data models supporting extensive data analysis projects across various departments.

- Analyzed large datasets for trends, providing actionable insights that reduced operational costs by 10%.

- Supported migration projects from on-premises systems to the AWS Cloud, ensuring data integrity and accessibility.

- Developed custom reports that improved strategic insights, supporting data-driven decision-making across teams.

- Evaluated data models and refined processes, achieving a 20% improvement in data accuracy.

AWS Data Operations Engineer resume sample

When applying for a role focused on AWS data operations, emphasize your experience with data management and cloud solutions, including AWS services such as Amazon S3, Amazon EC2, or Amazon RDS. Include relevant certifications such as AWS Certified Solutions Architect or AWS Certified Data Analytics – Specialty to show your technical expertise. Provide specific examples of how you optimized data workflows or improved system performance, using a 'skill-action-result' format. Mention your teamwork and problem-solving skills, since collaboration is key to successful outcomes in operations roles.

- Designed and deployed scalable data solutions on AWS, directly contributing to a 30% reduction in data processing times.

- Developed robust data pipelines on AWS, enabling seamless data flow and reliable access for analytics teams.

- Monitored AWS resources to optimize performance, increasing data reliability and availability by 25%.

- Collaborated with data scientists to understand data needs, delivering solutions that increased insight accuracy by 15%.

- Implemented automated data quality checks, reducing data integrity issues by 40%.

- Documented workflows comprehensively to ensure compliance with AWS best practices and support knowledge sharing.

- Engineered data solutions on cloud platforms, improving response times for analytics applications by 35%.

- Managed and optimized SQL-based data models, increasing query efficiency by 28%.

- Led a project to automate data ingestion, reducing manual intervention by 50%.

- Worked closely with cross-functional teams to enhance data architecture, saving 20% on data storage costs.

- Documented data management processes to ensure system integrity and ease of maintenance.

- Architected cloud solutions leveraging Azure services, leading to a 30% improvement in application scalability.

- Implemented CI/CD pipelines for data operations, reducing deployment errors by 60%.

- Developed data security protocols to safeguard data integrity, meeting all compliance standards.

- Customized data solutions based on client needs, increasing customer satisfaction scores by 15%.

- Analyzed large datasets, providing actionable insights that increased business efficiency by 20%.

- Automated data reporting processes, cutting reporting time by 40% and improving accuracy.

- Collaborated with stakeholders to develop data-driven strategies, enhancing decision-making processes.

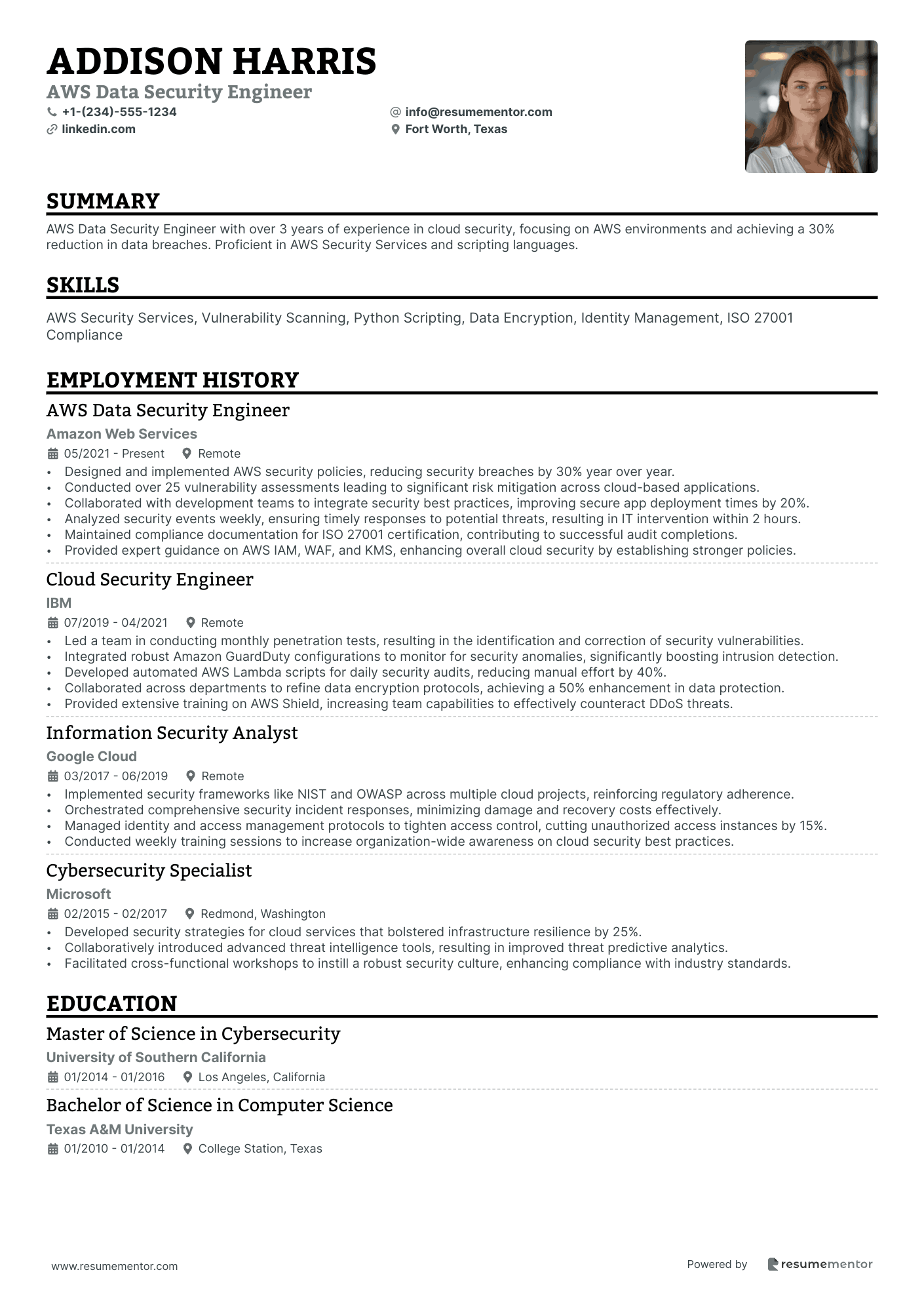

AWS Data Security Engineer resume sample

When applying for this role, showcase your experience with cloud security best practices and with compliance standards and regulations such as ISO 27001 or GDPR. Mention relevant certifications, such as AWS Certified Security – Specialty, to demonstrate your commitment to the field. Highlight your knowledge of risk assessment tools and security frameworks, and provide examples using the 'skill-action-result' approach. Include instances where your actions strengthened security measures or reduced vulnerabilities, illustrating your impact on the organization's overall security posture.

- Designed and implemented AWS security policies, reducing security breaches by 30% year over year.

- Conducted over 25 vulnerability assessments, significantly mitigating risk across cloud-based applications.

- Collaborated with development teams to integrate security best practices, reducing secure app deployment times by 20%.

- Analyzed security events weekly and ensured timely responses to potential threats, with IT intervention within 2 hours.

- Maintained compliance documentation for ISO 27001 certification, contributing to successful audits.

- Provided expert guidance on AWS IAM, AWS WAF, and AWS KMS, strengthening cloud security through stronger policies.

- Led a team conducting monthly penetration tests, identifying and remediating security vulnerabilities.

- Configured Amazon GuardDuty to monitor for security anomalies, significantly improving intrusion detection.

- Developed automated AWS Lambda scripts for daily security audits, reducing manual effort by 40%.

- Collaborated across departments to refine data encryption protocols, achieving a 50% improvement in data protection.

- Provided extensive training on AWS Shield, improving the team's ability to counter DDoS threats.

- Implemented security frameworks such as NIST and OWASP across multiple cloud projects, reinforcing regulatory adherence.

- Orchestrated comprehensive security incident responses, minimizing damage and recovery costs.

- Managed identity and access management protocols to tighten access control, cutting unauthorized access instances by 15%.

- Conducted weekly training sessions to raise organization-wide awareness of cloud security best practices.

- Developed security strategies for cloud services that improved infrastructure resilience by 25%.

- Collaboratively introduced advanced threat intelligence tools, improving predictive threat analytics.

- Facilitated cross-functional workshops to build a robust security culture, improving compliance with industry standards.

Certified AWS Data Systems Engineer resume sample

When applying for a Certified AWS Data Systems Engineer role, highlight your experience in data modeling and database design, and showcase your knowledge of cloud architectures and frameworks. If you hold AWS certifications related to databases or data engineering, mention them and explain how they add to your expertise. Concrete examples of projects where you improved data flow efficiency or reduced costs will strengthen your application. Use a 'challenge-solution-impact' format to clearly demonstrate the value you've brought to past roles.

- Developed a scalable data pipeline on AWS, reducing data processing time by 30% and improving server performance.

- Led a team of engineers to design data architectures, increasing data integration efficiency by 25%.

- Implemented robust ETL processes using AWS Glue, resulting in 20% faster data transformation and storage.

- Collaborated on cross-functional teams to resolve complex data challenges, significantly improving client satisfaction scores.

- Introduced a new data compliance framework, ensuring industry-standard data security and integrity across cloud environments.

- Enhanced system monitoring, identifying and resolving 90% of data-related issues before they affected stakeholders.

- Implemented data warehousing solutions with Amazon Redshift, improving query performance by 50%.

- Reduced ETL processing costs by 20% by optimizing AWS Lambda functions for data transformation tasks.

- Assessed and adjusted data modeling practices, improving data retrieval times by 35% in key client applications.

- Proactively monitored system performance, reducing storage-related downtime incidents by 40%.

- Aligned data processing architecture with the latest AWS developments, providing strategic insights for system enhancements.

- Designed cloud-native data solutions, increasing system availability by 15% across client platforms.

- Introduced new NoSQL database structures, improving data access speeds by 20% for large-scale applications.

- Implemented AWS-based data security measures, maintaining zero data breaches over a two-year period.

- Facilitated cross-departmental data integration projects, improving data access for internal users by 30%.

- Developed optimized SQL queries, cutting data retrieval time for client applications by 40%.

- Integrated new data storage solutions with Amazon S3, improving data backup and recovery processes.

- Reduced data processing costs by 15% through efficient use of AWS resources and services.

- Managed data transformation tasks, improving the accuracy and speed of client reports by 25%.

Crafting the perfect resume as an AWS data engineer is much like designing a well-oiled machine in the cloud: every detail matters. Your skills in AWS services and data management set you apart, so it's essential to translate them effectively into a standout resume. Clearly demonstrate your talent for seamless data migration and cloud infrastructure management, showing how you solve complex data challenges.

When you sit down to write your resume, aligning your technical strengths with the job's requirements can feel overwhelming. It's not just about listing your experience; it's about making your resume stand out in a stack of others. A resume template can help here, letting you organize your achievements and skills so your AWS expertise is easy to spot.

A well-crafted resume does more than highlight your technical skills; it also showcases your problem-solving abilities and adaptability in fast-paced environments. Aim to make a strong impression from the start. The journey to your next career step as an AWS data engineer begins with a resume that's as precise and dynamic as the technology you work with every day.

Key Takeaways

- When writing an AWS data engineer resume, emphasize your skills in AWS cloud services and data management, and align your technical strengths with the job requirements to stand out.

- Use resume templates to structure your achievements and skills, highlighting problem-solving abilities and adaptability in fast-paced environments.

- Focus on a clean, simple layout with modern fonts and always save the resume as a PDF to maintain its appearance across different devices.

- Your experience section should feature quantifiable achievements, tailored to the job description with action words and metrics, to demonstrate your impact in past roles.

- Strategically highlight both hard skills (like AWS tools, Python, and SQL) and soft skills (such as problem-solving and teamwork) to present a comprehensive view of your qualifications.

What to focus on when writing your AWS data engineer resume

Your AWS data engineer resume should clearly communicate your expertise with AWS cloud services and your skills in managing and optimizing data solutions. This shows your technical abilities, your experience, and how you add value to a team. Highlight in particular your ability to design scalable data pipelines and improve data processing, as these areas make your profile stand out.

How to structure your AWS data engineer resume

- Contact Information: your full name, phone number, email address, and LinkedIn profile. Use a professional email address and double-check every detail; clear, correct contact information makes it easy for recruiters to reach you.

- Professional Summary: summarize your AWS data engineering background and spotlight key achievements. Mention specific AWS services such as Amazon Redshift, Amazon S3, or AWS Lambda to underline your hands-on experience. The summary should capture not just what you've done but how it aligns with the employer's needs, setting the context for the rest of your resume.

- Skills: list the skills directly related to AWS data engineering, including AWS cloud services, data warehousing, ETL processes, SQL, and programming languages such as Python. Where possible, connect each skill to your past experience; this section is the backbone of your technical profile.

- Work Experience: describe your previous data engineering roles, focusing on your contributions, the tools you've mastered, and your successes in optimizing data pipelines and managing large datasets. Build a clear narrative of your professional journey so that your experience reads as compelling evidence that you suit the role.

- Education: list your highest degree and relevant coursework. Education details reinforce your technical foundation and your readiness for complex data engineering work.

- Certifications: list AWS-related certifications, such as AWS Certified Data Analytics or AWS Certified Solutions Architect, in their own section to emphasize your expertise in AWS technologies and your commitment to continuous learning. Certifications complement your skills and work experience sections.

You might also add sections for projects, awards, or volunteer work to show your passion for the field and round out the picture of your professional self. Below, we'll cover each section in more detail and discuss how to structure your resume for maximum impact.

Which resume format to choose

As an AWS data engineer, crafting a well-formatted resume is crucial for making a strong impression on potential employers. Start with a clean and simple layout, which allows your technical skills and achievements to take center stage. Your resume needs to be easy to read and visually appealing, so selecting the right font is an important step. Consider using modern options like Lato, Montserrat, or Raleway. These fonts give your resume a contemporary feel, helping it stand out from the crowd without overshadowing the content.

Saving your resume as a PDF is essential; this file format ensures your resume maintains its intended look across different devices and platforms. It’s a small step that has a big impact on professionalism. When formatting the document, remember to use standard 1-inch margins. This choice offers a balanced appearance and provides enough white space, making it easier for hiring managers to quickly digest key information.

By focusing on these elements (layout, fonts, file type, and margins), you'll create a resume that highlights your AWS data engineering skills and makes you a compelling candidate in a competitive job market.

How to write a quantifiable resume experience section

Your AWS Data Engineer resume should highlight achievements that align directly with the job ad, emphasizing quantifiable results that show your impact in previous roles. Start with your most recent experience and work back over the past 10-15 years, highlighting job titles that show growth or relevant responsibilities. Tailor each entry by using keywords from the job ad and pairing them with metrics that show success. Use action words like "optimized," "implemented," and "automated" to make your contributions clear and impactful.

- Optimized a data pipeline using Amazon S3 and AWS Lambda, cutting processing time by 40%.

- Implemented a scalable ETL pipeline with AWS Glue, reducing costs by 30%.

- Automated data quality checks in Amazon Redshift, boosting accuracy by 25%.

- Led a project to improve data accessibility by 50% using Amazon Kinesis.

This experience section effectively highlights your impact by focusing on measurable accomplishments that resonate with the technical demands of an AWS Data Engineer role. Each bullet is crafted with strong action words and specifies the outcomes of your efforts, making it easy for recruiters to grasp your ability to enhance processes and achieve significant results. Tailoring this content to the job ad strengthens your position as a compelling candidate, seamlessly connecting your past successes to the role you're pursuing.
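Quantified bullets like these are only credible if you can back up the numbers. As a minimal sketch (the function names and sample figures below are hypothetical, not taken from any real project), here is how a before-and-after measurement becomes a percentage such as "cutting processing time by 40%":

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage decrease from a baseline `before` to a new value `after`.

    Positive results mean the metric dropped (e.g. runtime or cost went down);
    negative results mean it grew.
    """
    if before <= 0:
        raise ValueError("baseline must be a positive number")
    return (before - after) / before * 100


def percent_increase(before: float, after: float) -> float:
    """Percentage increase from `before` to `after` (e.g. for throughput gains)."""
    return -percent_reduction(before, after)


if __name__ == "__main__":
    # Hypothetical example: a nightly job went from 50 minutes to 30 minutes.
    runtime_cut = percent_reduction(before=50, after=30)
    print(f"Cut processing time by {runtime_cut:.0f}%")  # Cut processing time by 40%

    # Hypothetical example: throughput rose from 200 to 300 records per second.
    throughput_gain = percent_increase(before=200, after=300)
    print(f"Increased throughput by {throughput_gain:.0f}%")  # Increased throughput by 50%
```

The denominator is always the original baseline. Also be clear about whether a figure is a relative change or a difference in percentage points: raising accuracy from 80% to 100% is a 25% relative increase but a 20-percentage-point gain.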

Training and Development-Focused resume experience section

A training-focused AWS Data Engineer resume experience section should effectively communicate your role in enhancing team skills and promoting a culture of learning. Start by describing specific projects where you took the lead in developing training modules or initiatives. Highlight your ability to make learning engaging, such as conducting workshops on AWS services that received high satisfaction ratings.

Illustrate the tangible outcomes of your efforts, such as faster project delivery or more team members earning certifications. Emphasize how you collaborated with multiple departments to shape learning paths that align with broader company goals. If you introduced cloud-based training solutions, quantify any cost savings and gains in employee engagement. Together, these points demonstrate your ability to inspire continuous learning and development in a technical environment.

Data Engineer

Tech Solutions Inc.

January 2020 - Present

- Created dynamic AWS training modules that improved team skills and accelerated project delivery by 20%.

- Led energetic workshops on AWS services, achieving a 95% satisfaction rate among participants.

- Collaborated across teams to develop learning paths, increasing AWS certification rates by 30%.

- Introduced a cloud-based training system, reducing costs by 25% while increasing user participation.

Innovation-Focused resume experience section

An innovation-focused AWS Data Engineer resume experience section should emphasize your ability to think creatively and drive impactful solutions. By illustrating how your unique ideas have led to process improvements and problem resolution, you can convey your innovative approach effectively. Focus on specific projects where you’ve made a tangible difference, highlighting your skillful use of AWS tools to bring these novel solutions to life.

Make sure to showcase not just your technical prowess but also your creative thinking. Use specific examples, backed by metrics where possible, to underscore your achievements, and construct each bullet point to highlight your role in driving efficiency through new technologies or methods.

AWS Data Engineer

NextGen Analytics

June 2020 - Present

- Led the development of a real-time data processing pipeline using Amazon Kinesis, cutting data latency by 60%.

- Designed and implemented a scalable data lake on Amazon S3, enabling on-demand analytics and cutting storage costs by 30%.

- Deployed machine learning models with Amazon SageMaker, boosting predictive analysis accuracy by 20%.

- Automated ETL processes via AWS Lambda, enhancing data accuracy and cutting manual workload by 40%.

Achievement-Focused resume experience section

An achievement-focused AWS Data Engineer resume experience section should highlight your key accomplishments and the impact of your work, clearly connecting each achievement to relevant skills. Start with significant projects where you made a difference, detailing the challenges you faced and the actions you took to overcome them. Use active verbs to convey your contribution and name the technologies or tools you applied, so that each point aligns with sought-after skills like coding, collaboration, or problem-solving.

Maintain clarity and conciseness by steering clear of jargon that might obscure your achievements. Instead, utilize straightforward language that effectively articulates your contributions and the benefits they brought to the organization. By incorporating quantifiable outcomes such as statistics or percentages, you can make your accomplishments more compelling and easy to understand.

AWS Data Engineer

Tech Innovators Inc.

June 2020 - Present

- Led the migration of over 500 TB of data to Amazon S3, improving data accessibility and cutting storage costs by 25%.

- Built a streamlined ETL process with AWS Glue, boosting data processing efficiency by 30%.

- Strengthened data security by implementing access controls with AWS IAM, safeguarding sensitive information.

- Worked closely with cross-functional teams to design cloud solutions, reducing project delivery time by 40%.

Technology-Focused resume experience section

A technology-focused AWS Data Engineer resume experience section should show how your technical skills drive company success. Begin by highlighting projects you've contributed to, using clear metrics to underscore your impact, such as improved process efficiency or reduced costs. Reference AWS tools such as Amazon Redshift and AWS Lambda to give your work context. It's also important to demonstrate that you work well with teams and stakeholders.

Focus on framing your work in terms of outcomes, using action-oriented language to vividly convey your achievements. Connect any challenges to the innovative strategies you used to overcome them, emphasizing your problem-solving skills. Ensure each bullet point aligns with the broader narrative of demonstrating your technical expertise and delivering measurable results, making your AWS Data Engineer experience compelling and cohesive.

AWS Data Engineer

Tech Innovations Inc.

June 2020 - Present

- Used AWS Lambda and S3 to streamline data pipelines, slashing data processing time by 30%.

- Partnered with cross-functional teams to build a scalable data warehouse with Redshift, boosting data availability for various stakeholders.

- Enhanced ETL processes, achieving a 40% increase in data extraction and transformation efficiency.

- Created automated monitoring tools, reducing system downtime and improving operational reliability.

Write your AWS data engineer resume summary section

A results-focused AWS Data Engineer resume summary should introduce your professional story seamlessly. This section is your chance to quickly convey your career highlights and set a strong tone for the rest of your resume. To make your summary shine, spotlight your key achievements and skills that align with the job you're targeting. Share your expertise in managing data solutions with AWS cloud services, and highlight instances where you've tackled challenges, optimized workflows, or reduced costs effectively. Here's a concise example:

This example stands out by connecting your technical strengths with tangible results, portraying a clear image of your capabilities. Describe yourself using action-oriented phrases that showcase measurable successes and emphasize your professional strengths.

Understanding the differences between resume sections is essential for clarity. A resume summary offers a snapshot of your skills and achievements; it differs from a resume objective, which outlines your career aspirations and how they align with the role, usually aimed at candidates with less experience. On the other hand, a resume profile mixes elements of both a summary and an objective. Meanwhile, a summary of qualifications highlights key skills in bullet points for quick reading. By tailoring your summary, you ensure it aligns well with the job you're applying for, creating a clear connection between your experience and the job requirements.

Listing your AWS data engineer skills on your resume

A skills-focused AWS Data Engineer resume should effectively highlight your technical and interpersonal abilities, whether through a dedicated skills section or woven into your experience and summary. This strategy ensures your most relevant abilities stand out in context, presenting a well-rounded picture of your expertise.

Soft skills, such as communication and leadership, highlight your interpersonal qualities. Hard skills cover the technical side, like proficiency with AWS services or data modeling expertise. Together, these skills serve as powerful resume keywords, making your resume more appealing to employers and applicant tracking systems.

When you list skills in a standalone section, they become easy to spot. Using industry-relevant terms ensures your qualifications align with typical job descriptions, swiftly drawing attention to your capabilities.

Example JSON skills section:

This example is effective because it lists a mix of essential skills directly tied to the AWS Data Engineer role. It remains concise yet comprehensive, ensuring clarity without inundating the reader.

Best hard skills to feature on your AWS data engineer resume

Your hard skills reveal your technical expertise and your ability to handle specific job tasks. As an AWS Data Engineer, focus on your proficiency with AWS tools and data management, showcasing your capacity to manage, analyze, and protect data effectively. Important skills include:

Hard Skills

- AWS Glue

- AWS Lambda

- Amazon Redshift

- Data Migration

- ETL (Extract, Transform, Load) Processes

- Data Warehousing

- Big Data Technologies

- Python Programming

- SQL Queries

- Data Modeling

- AWS Data Pipeline

- Apache Spark

- Hadoop Ecosystem

- CloudWatch

- IAM Policies

Best soft skills to feature on your AWS data engineer resume

Your soft skills emphasize how you interact with others and solve problems. For an AWS Data Engineer, these should highlight your ability to collaborate and think critically in dynamic environments. They illustrate your knack for pushing projects forward. Focus on these key areas:

Soft Skills

- Problem-Solving

- Team Collaboration

- Communication

- Time Management

- Attention to Detail

- Adaptability

- Analytical Thinking

- Creativity

- Leadership

- Decision-Making

- Critical Thinking

- Active Listening

- Resilience

- Conflict Resolution

- Project Management

How to include your education on your resume

An education section is an important part of your AWS Data Engineer resume. It helps employers see your qualifications quickly. Ensure the education is tailored to the job; leave out what is not relevant. If your GPA is above 3.5, it can be beneficial to include it. Note cum laude honors clearly. When listing your degree, include the full title, school, and dates attended.

If your GPA is strong, list it alongside the degree. Add cum laude recognition after the degree name. Write out the full degree name, such as "Bachelor of Science in Computer Science."

Here's an example of what not to do:

Here's how it should look:

The second example is strong because it includes a relevant degree, cum laude honors, and a solid GPA. This helps to demonstrate not only knowledge but also academic achievement. It focuses on education that fits the AWS Data Engineer role, making your resume more appealing to employers.

How to include AWS data engineer certificates on your resume

A certificates section is a crucial element of your AWS Data Engineer resume because it highlights your technical skills and expertise. For each certification, list its name, the date you earned it, and the issuing organization, which adds credibility. You can also place key certifications prominently in your resume header to catch the recruiter's eye immediately. For example:

Here is a standalone certificates section example. This example is well-detailed and neatly formatted:

[here was the JSON object 2]

This example works well because it lists relevant certifications for an AWS Data Engineer role. The certifications from major providers highlight versatility. Each entry has the title and issuing organization, ensuring clarity and authenticity. This layout makes it easy for recruiters to see your qualifications at a glance. It shows you have up-to-date knowledge and relevant skills in the field. This enhanced focus can give you an edge in a competitive job market.

Extra sections to include in your AWS data engineer resume

In today's competitive job market, an impressive resume can set you apart from the crowd. As an AWS Data Engineer, highlighting your technical skills and experience is crucial, but adding sections that showcase your personality and extracurricular involvement can be just as impactful.

- Language section: list the spoken languages you know and your fluency in each. This shows you can work with multicultural teams; programming languages such as Python, SQL, and Java belong in your skills section instead.

- Hobbies and interests section: highlight hobbies like building DIY gadgets or participating in hackathons to show your enthusiasm for tech outside of work. Sharing interests also helps you connect with potential employers on a personal level.

- Volunteer work section: describe your experience volunteering at local community tech workshops. This demonstrates your commitment to using your skills for social good and helping others.

- Books section: mention a few influential books you have read on cloud computing or data engineering. This reflects your commitment to ongoing learning and staying current with industry trends.

Including these additional sections on your resume not only rounds out your professional image but also gives your potential employer a better understanding of your character and passions. Together with your technical experience, these elements paint a comprehensive picture of who you are as both a professional and a person.

In Conclusion

Crafting a standout resume as an AWS Data Engineer is a strategic process that blends your technical expertise with the ability to communicate your skills effectively. By focusing on areas such as cloud services, data management, and problem-solving, you'll create a compelling narrative of your professional journey. Remember to highlight key achievements with quantifiable outcomes, as they illustrate your value to potential employers. A clean, modern resume format with an emphasis on clarity and organization ensures that your qualifications are immediately apparent.

Including your education and certifications is equally important, providing evidence of your formal training and commitment to continuous learning. Tailoring your resume to the specific role by incorporating relevant keywords will make it more appealing to applicant tracking systems and hiring managers alike. Furthermore, don't overlook the power of soft skills, as they demonstrate your ability to communicate and collaborate in diverse environments.

Enhancing your resume with additional sections like languages, hobbies, and volunteer work offers a well-rounded view of your personal interests and passions, adding depth to your professional persona. Together, these elements create a comprehensive and impactful resume. With a refined and polished document, you'll confidently take the first step toward securing your desired role as an AWS Data Engineer.


© 2025. All rights reserved.
