GCP Data Engineer Resume Examples

Jul 18, 2024


Crafting your GCP data engineer resume with cloud cred: how you can stand out and "byte" into your dream job.


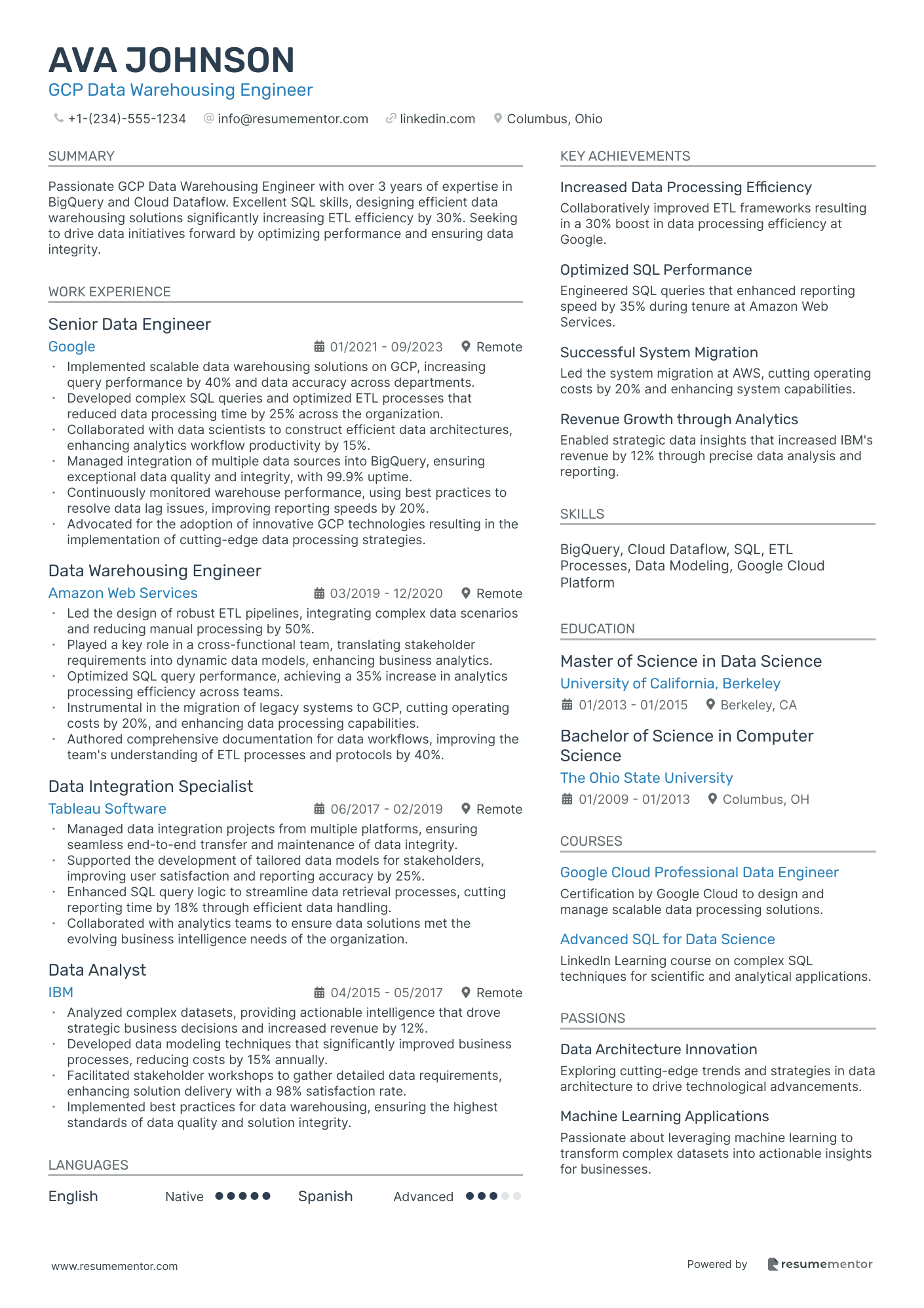

GCP Data Warehousing Engineer

GCP Big Data Engineer

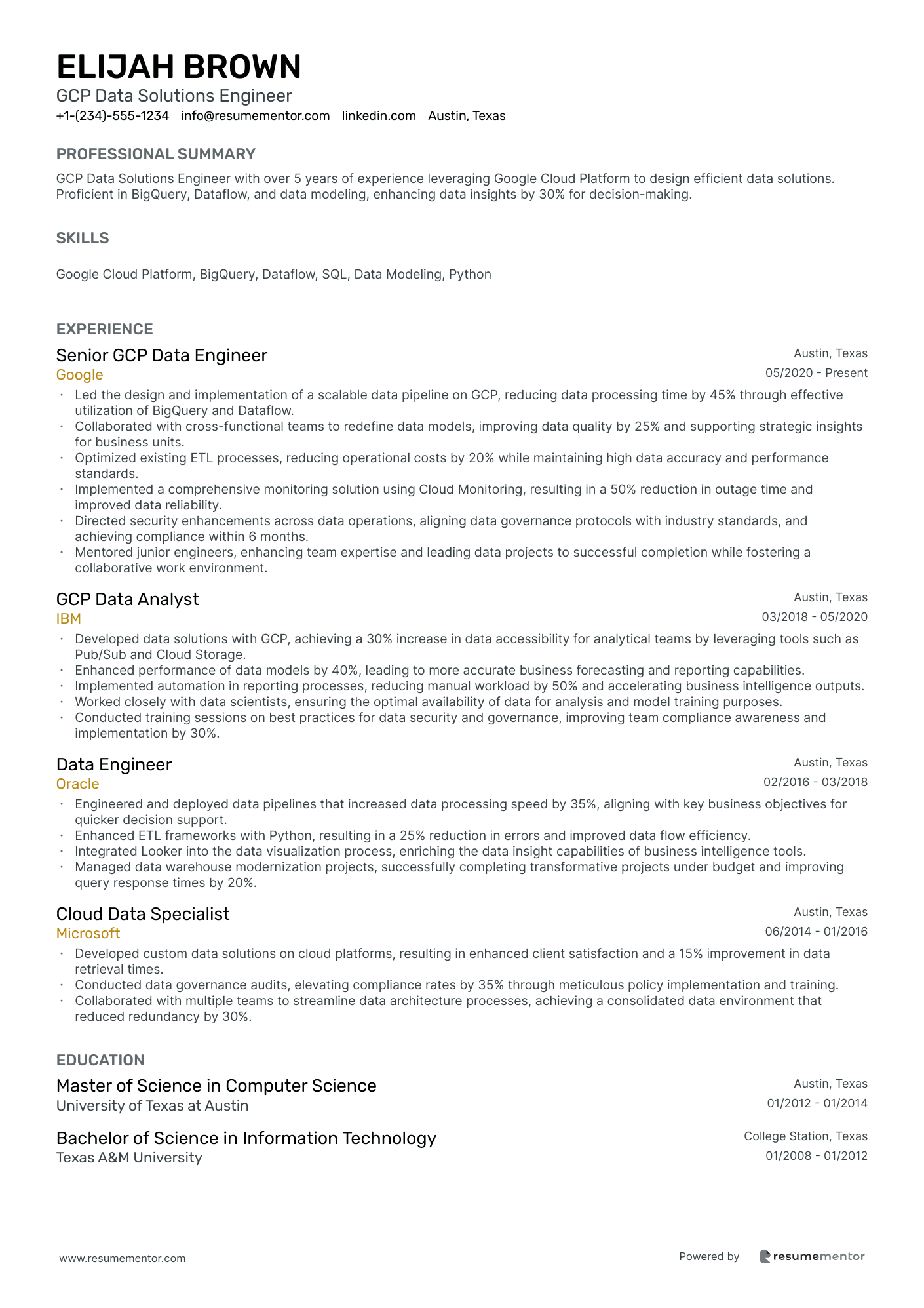

GCP Data Solutions Engineer

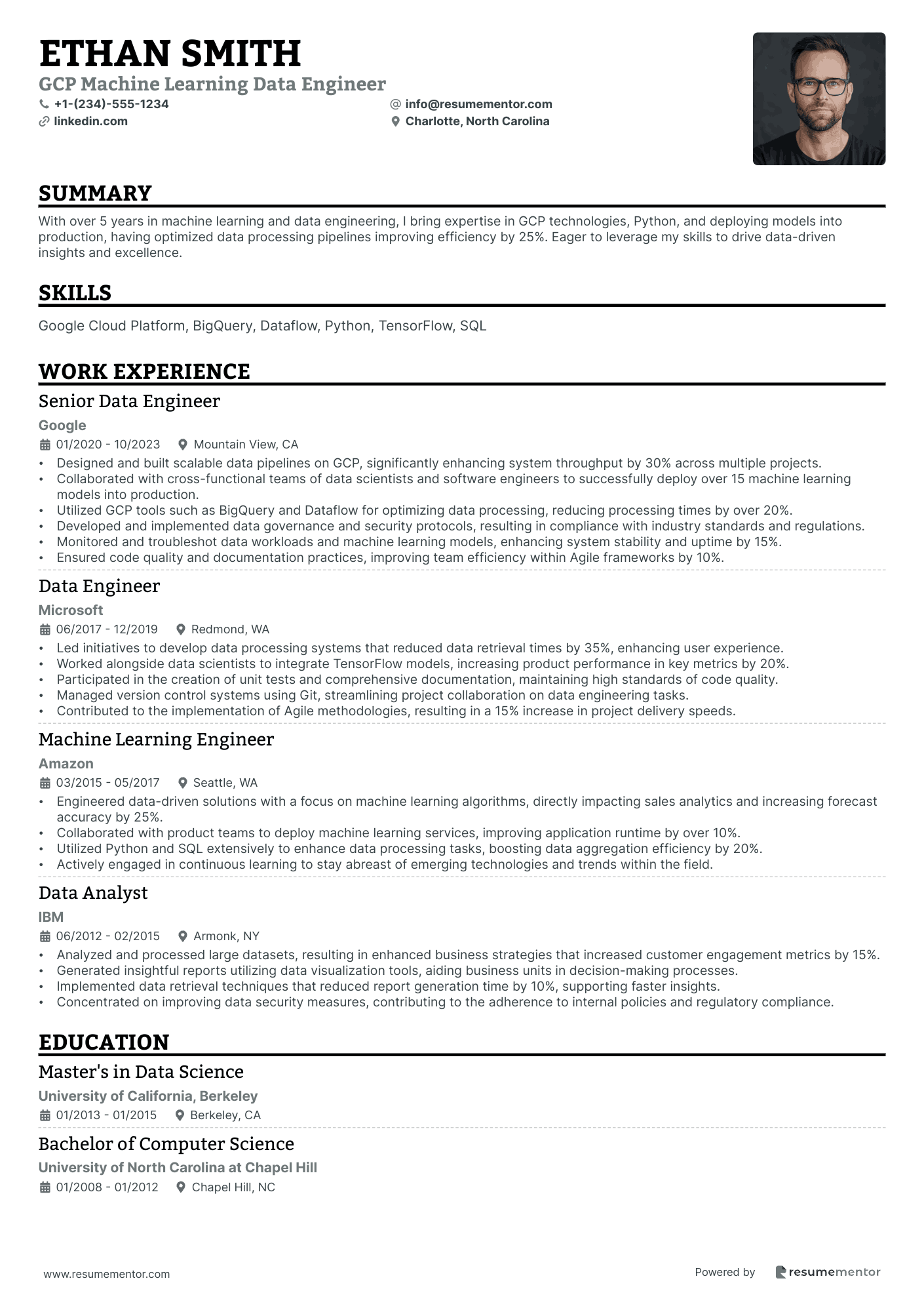

GCP Machine Learning Data Engineer

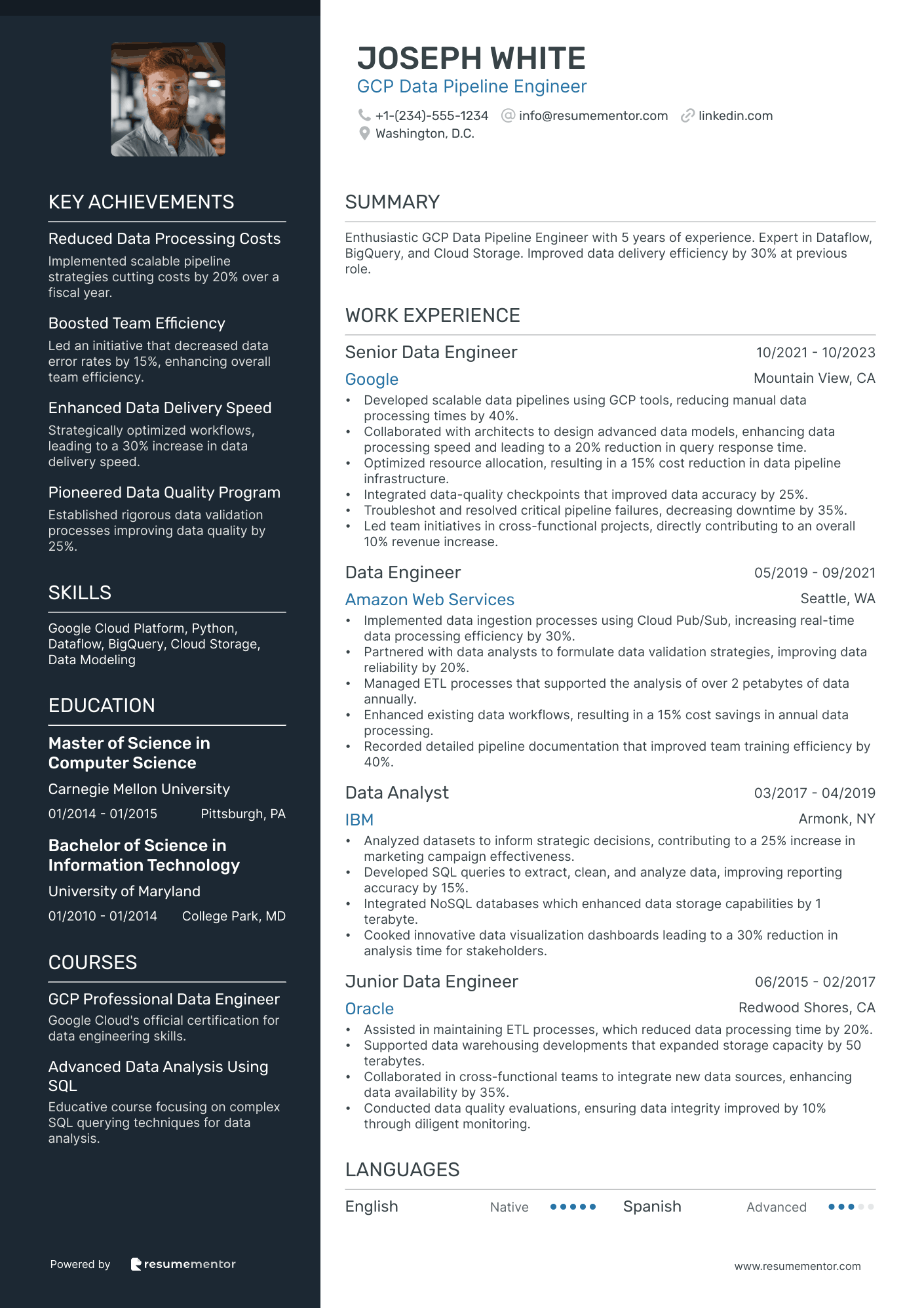

GCP Data Pipeline Engineer

GCP Cloud Data Architect

GCP Data Infrastructure Engineer

GCP Data Migration Engineer

GCP Data Security Engineer

GCP Data Analytics Engineer

GCP Data Warehousing Engineer resume sample

- Implemented scalable data warehousing solutions on GCP, increasing query performance by 40% and improving data accuracy across departments.

- Developed complex SQL queries and optimized ETL processes, reducing data processing time by 25% across the organization.

- Collaborated with data scientists to construct efficient data architectures, enhancing analytics workflow productivity by 15%.

- Managed integration of multiple data sources into BigQuery, ensuring exceptional data quality and integrity with 99.9% uptime.

- Continuously monitored warehouse performance, applying best practices to resolve data lag issues and improving reporting speeds by 20%.

- Advocated for the adoption of innovative GCP technologies, resulting in the implementation of cutting-edge data processing strategies.

- Led the design of robust ETL pipelines, integrating complex data scenarios and reducing manual processing by 50%.

- Played a key role in a cross-functional team, translating stakeholder requirements into dynamic data models that enhanced business analytics.

- Optimized SQL query performance, achieving a 35% increase in analytics processing efficiency across teams.

- Played an instrumental role in migrating legacy systems to GCP, cutting operating costs by 20% and enhancing data processing capabilities.

- Authored comprehensive documentation for data workflows, improving the team's understanding of ETL processes and protocols by 40%.

- Managed data integration projects from multiple platforms, ensuring seamless end-to-end transfer and maintenance of data integrity.

- Supported the development of tailored data models for stakeholders, improving user satisfaction and reporting accuracy by 25%.

- Enhanced SQL query logic to streamline data retrieval, cutting reporting time by 18% through efficient data handling.

- Collaborated with analytics teams to ensure data solutions met the evolving business intelligence needs of the organization.

- Analyzed complex datasets, providing actionable intelligence that drove strategic business decisions and increased revenue by 12%.

- Developed data modeling techniques that significantly improved business processes, reducing costs by 15% annually.

- Facilitated stakeholder workshops to gather detailed data requirements, enhancing solution delivery with a 98% satisfaction rate.

- Implemented best practices for data warehousing, ensuring the highest standards of data quality and solution integrity.

GCP Big Data Engineer resume sample

- Designed an end-to-end data pipeline using BigQuery and Dataflow, reducing query run time by 30% and improving processing efficiency.

- Collaborated with 5 cross-functional teams to align data models with business objectives, resulting in a 25% increase in operational efficiency.

- Led an initiative to implement data validation processes, improving data quality by 40% and substantially reducing error rates.

- Optimized ETL workflows, increasing data retrieval speeds by 40% and supporting faster decision-making.

- Played an instrumental role in a project that reduced data complexity through innovative data modeling while maintaining data integrity and quality.

- Achieved high data processing uptime and reliability, keeping annual downtime below 2%.

- Implemented scalable data solutions with GCP services, improving scalability and flexibility by 50%.

- Developed data models for real-time analytics, improving data-driven decision-making with a 10% increase in insights derived.

- Collaborated with data scientists to translate complex requirements into technical specifications, enhancing project accuracy.

- Increased data processing speed by 35% by refining workflows and adopting innovative big data strategies.

- Strengthened data governance by automating auditing processes, significantly improving compliance and data accuracy.

- Streamlined ETL processes, improving data processing times by 20% and reducing operational costs through GCP integration.

- Enhanced the performance of existing data models, improving information retrieval time by 15%.

- Implemented a data auditing process that significantly improved data integrity and accuracy.

- Managed and optimized cloud-based data storage solutions, improving overall reliability and efficiency.

- Developed robust SQL and NoSQL database systems supporting analytics and reporting, increasing data accessibility by 30%.

- Collaborated with stakeholders to define project scope and technical requirements, improving client satisfaction by 20%.

- Implemented solutions for data processing challenges, resulting in a 10% decrease in processing errors.

- Managed data extraction and transformation to ensure data quality, supporting better analytics and business insights.

GCP Data Solutions Engineer resume sample

- Led the design and implementation of a scalable data pipeline on GCP, reducing data processing time by 45% through effective utilization of BigQuery and Dataflow.

- Collaborated with cross-functional teams to redefine data models, improving data quality by 25% and supporting strategic insights for business units.

- Optimized existing ETL processes, reducing operational costs by 20% while maintaining high data accuracy and performance standards.

- Implemented a comprehensive monitoring solution using Cloud Monitoring, resulting in a 50% reduction in outage time and improved data reliability.

- Directed security enhancements across data operations, aligning data governance protocols with industry standards, and achieving compliance within 6 months.

- Mentored junior engineers, enhancing team expertise and leading data projects to successful completion while fostering a collaborative work environment.

- Developed data solutions with GCP, achieving a 30% increase in data accessibility for analytical teams by leveraging tools such as Pub/Sub and Cloud Storage.

- Enhanced performance of data models by 40%, leading to more accurate business forecasting and reporting capabilities.

- Implemented automation in reporting processes, reducing manual workload by 50% and accelerating business intelligence outputs.

- Worked closely with data scientists, ensuring the optimal availability of data for analysis and model training purposes.

- Conducted training sessions on best practices for data security and governance, improving team compliance awareness and implementation by 30%.

- Engineered and deployed data pipelines that increased data processing speed by 35%, aligning with key business objectives for quicker decision support.

- Enhanced ETL frameworks with Python, resulting in a 25% reduction in errors and improved data flow efficiency.

- Integrated Looker into the data visualization process, enriching the data insight capabilities of business intelligence tools.

- Managed data warehouse modernization projects, successfully completing transformative projects under budget and improving query response times by 20%.

- Developed custom data solutions on cloud platforms, resulting in enhanced client satisfaction and a 15% improvement in data retrieval times.

- Conducted data governance audits, elevating compliance rates by 35% through meticulous policy implementation and training.

- Collaborated with multiple teams to streamline data architecture processes, achieving a consolidated data environment that reduced redundancy by 30%.

GCP Machine Learning Data Engineer resume sample

- Designed and built scalable data pipelines on GCP, significantly enhancing system throughput by 30% across multiple projects.

- Collaborated with cross-functional teams of data scientists and software engineers to successfully deploy over 15 machine learning models into production.

- Used GCP tools such as BigQuery and Dataflow to optimize data processing, reducing processing times by over 20%.

- Developed and implemented data governance and security protocols, achieving compliance with industry standards and regulations.

- Monitored and troubleshot data workloads and machine learning models, enhancing system stability and uptime by 15%.

- Upheld code quality and documentation practices, improving team efficiency within Agile frameworks by 10%.

- Led initiatives to develop data processing systems that reduced data retrieval times by 35%, enhancing user experience.

- Worked alongside data scientists to integrate TensorFlow models, increasing product performance in key metrics by 20%.

- Participated in the creation of unit tests and comprehensive documentation, maintaining high standards of code quality.

- Managed version control using Git, streamlining project collaboration on data engineering tasks.

- Contributed to the implementation of Agile methodologies, resulting in a 15% increase in project delivery speed.

- Engineered data-driven solutions with a focus on machine learning algorithms, directly impacting sales analytics and increasing forecast accuracy by 25%.

- Collaborated with product teams to deploy machine learning services, improving application runtime by over 10%.

- Used Python and SQL extensively to enhance data processing tasks, boosting data aggregation efficiency by 20%.

- Actively engaged in continuous learning to stay abreast of emerging technologies and trends within the field.

- Analyzed and processed large datasets, informing business strategies that increased customer engagement metrics by 15%.

- Generated insightful reports using data visualization tools, aiding business units in decision-making.

- Implemented data retrieval techniques that reduced report generation time by 10%, supporting faster insights.

- Concentrated on improving data security measures, contributing to adherence to internal policies and regulatory compliance.

GCP Data Pipeline Engineer resume sample

- Developed scalable data pipelines using GCP tools, reducing manual data processing times by 40%.

- Collaborated with architects to design advanced data models, enhancing data processing speed and leading to a 20% reduction in query response time.

- Optimized resource allocation, resulting in a 15% cost reduction in data pipeline infrastructure.

- Integrated data-quality checkpoints that improved data accuracy by 25%.

- Troubleshot and resolved critical pipeline failures, decreasing downtime by 35%.

- Led team initiatives in cross-functional projects, directly contributing to an overall 10% revenue increase.

- Implemented data ingestion processes using Cloud Pub/Sub, increasing real-time data processing efficiency by 30%.

- Partnered with data analysts to formulate data validation strategies, improving data reliability by 20%.

- Managed ETL processes that supported the analysis of over 2 petabytes of data annually.

- Enhanced existing data workflows, resulting in 15% savings in annual data processing costs.

- Wrote detailed pipeline documentation that improved team training efficiency by 40%.

- Analyzed datasets to inform strategic decisions, contributing to a 25% increase in marketing campaign effectiveness.

- Developed SQL queries to extract, clean, and analyze data, improving reporting accuracy by 15%.

- Integrated NoSQL databases, expanding data storage capacity by 1 terabyte.

- Created innovative data visualization dashboards, leading to a 30% reduction in analysis time for stakeholders.

- Assisted in maintaining ETL processes, reducing data processing time by 20%.

- Supported data warehousing developments that expanded storage capacity by 50 terabytes.

- Collaborated in cross-functional teams to integrate new data sources, enhancing data availability by 35%.

- Conducted data quality evaluations, improving data integrity by 10% through diligent monitoring.

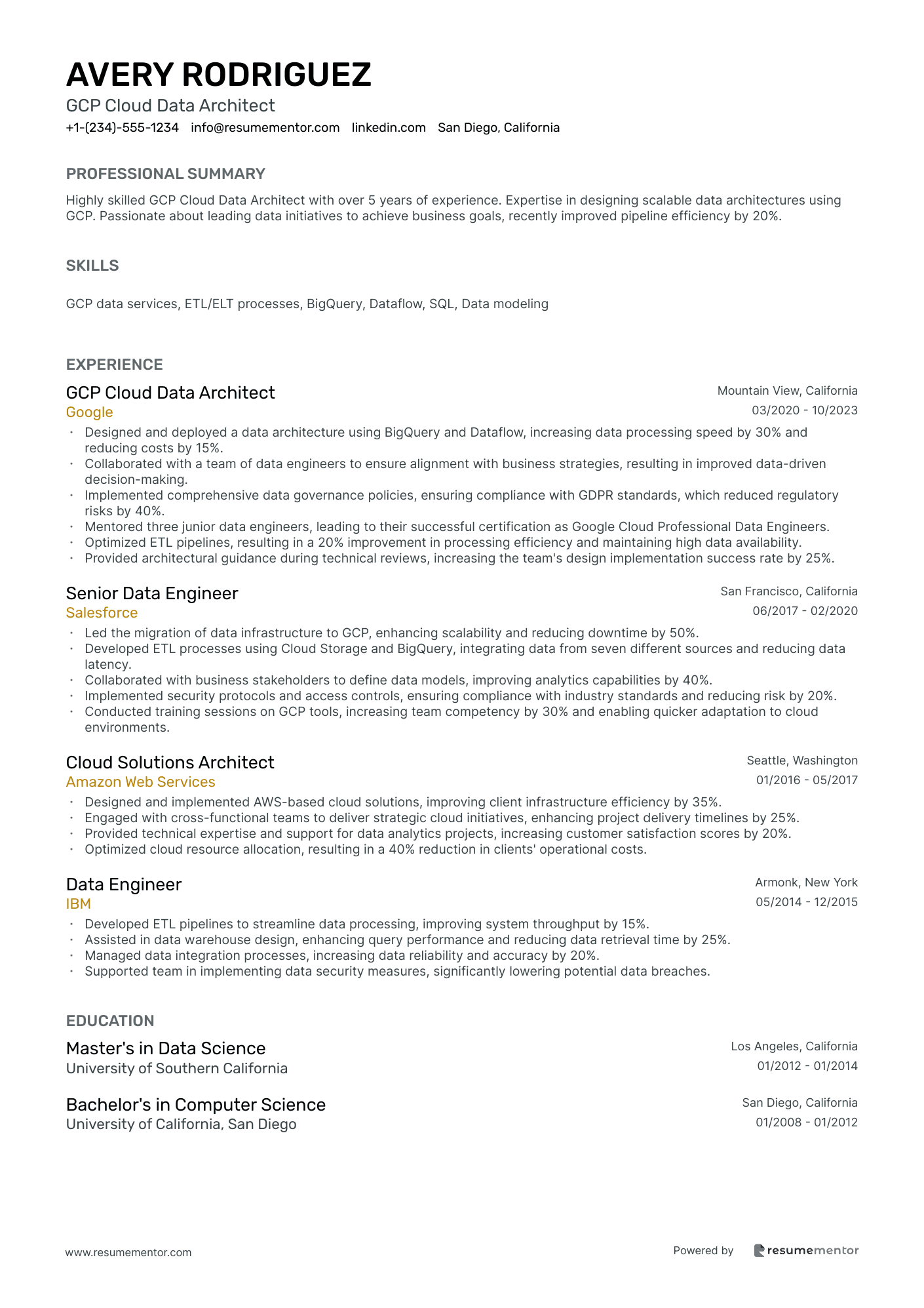

GCP Cloud Data Architect resume sample

- Designed and deployed a data architecture using BigQuery and Dataflow, increasing data processing speed by 30% and reducing costs by 15%.

- Collaborated with a team of data engineers to ensure alignment with business strategies, resulting in improved data-driven decision-making.

- Implemented comprehensive data governance policies, ensuring compliance with GDPR standards, which reduced regulatory risks by 40%.

- Mentored three junior data engineers, leading to their successful certification as Google Cloud Professional Data Engineers.

- Optimized ETL pipelines, resulting in a 20% improvement in processing efficiency while maintaining high data availability.

- Provided architectural guidance during technical reviews, increasing the team's design implementation success rate by 25%.

- Led the migration of data infrastructure to GCP, enhancing scalability and reducing downtime by 50%.

- Developed ETL processes using Cloud Storage and BigQuery, integrating data from seven different sources and reducing data latency.

- Collaborated with business stakeholders to define data models, improving analytics capabilities by 40%.

- Implemented security protocols and access controls, ensuring compliance with industry standards and reducing risk by 20%.

- Conducted training sessions on GCP tools, increasing team competency by 30% and enabling quicker adaptation to cloud environments.

- Designed and implemented AWS-based cloud solutions, improving client infrastructure efficiency by 35%.

- Engaged with cross-functional teams to deliver strategic cloud initiatives, improving project delivery timelines by 25%.

- Provided technical expertise and support for data analytics projects, increasing customer satisfaction scores by 20%.

- Optimized cloud resource allocation, resulting in a 40% reduction in clients' operational costs.

- Developed ETL pipelines to streamline data processing, improving system throughput by 15%.

- Assisted in data warehouse design, enhancing query performance and reducing data retrieval time by 25%.

- Managed data integration processes, increasing data reliability and accuracy by 20%.

- Supported the team in implementing data security measures, significantly lowering the risk of data breaches.

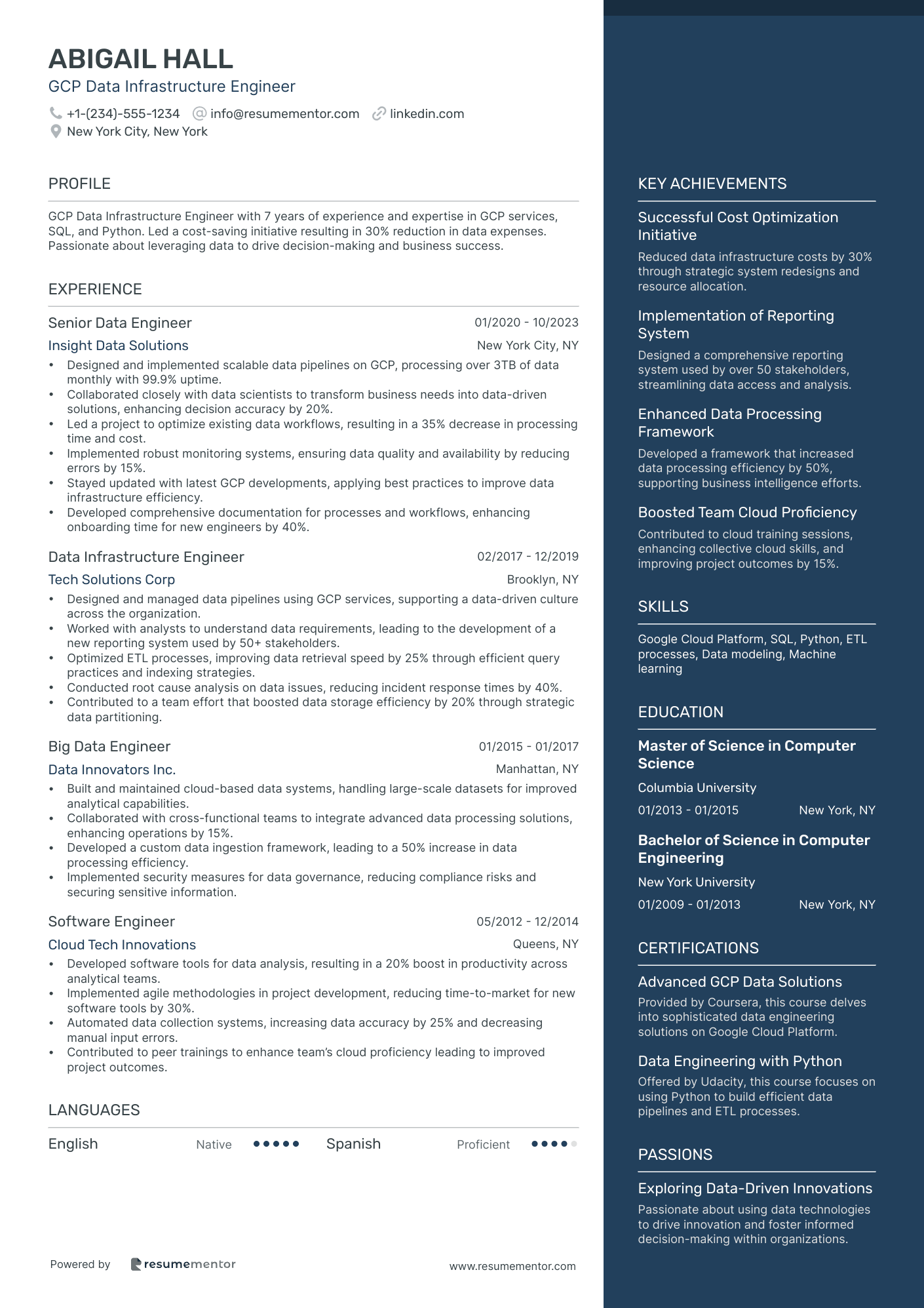

GCP Data Infrastructure Engineer resume sample

- Designed and implemented scalable data pipelines on GCP, processing over 3 TB of data monthly with 99.9% uptime.

- Collaborated closely with data scientists to transform business needs into data-driven solutions, enhancing decision accuracy by 20%.

- Led a project to optimize existing data workflows, resulting in a 35% decrease in processing time and cost.

- Implemented robust monitoring systems that safeguarded data quality and availability, reducing errors by 15%.

- Stayed current with the latest GCP developments, applying best practices to improve data infrastructure efficiency.

- Developed comprehensive documentation for processes and workflows, cutting onboarding time for new engineers by 40%.

- Designed and managed data pipelines using GCP services, supporting a data-driven culture across the organization.

- Worked with analysts to understand data requirements, leading to the development of a new reporting system used by 50+ stakeholders.

- Optimized ETL processes, improving data retrieval speed by 25% through efficient query practices and indexing strategies.

- Conducted root cause analysis on data issues, reducing incident response times by 40%.

- Contributed to a team effort that boosted data storage efficiency by 20% through strategic data partitioning.

- Built and maintained cloud-based data systems, handling large-scale datasets for improved analytical capabilities.

- Collaborated with cross-functional teams to integrate advanced data processing solutions, enhancing operations by 15%.

- Developed a custom data ingestion framework, leading to a 50% increase in data processing efficiency.

- Implemented security measures for data governance, reducing compliance risks and securing sensitive information.

- Developed software tools for data analysis, resulting in a 20% boost in productivity across analytical teams.

- Implemented agile methodologies in project development, reducing time-to-market for new software tools by 30%.

- Automated data collection systems, increasing data accuracy by 25% and decreasing manual input errors.

- Contributed to peer training sessions that enhanced the team's cloud proficiency, leading to improved project outcomes.

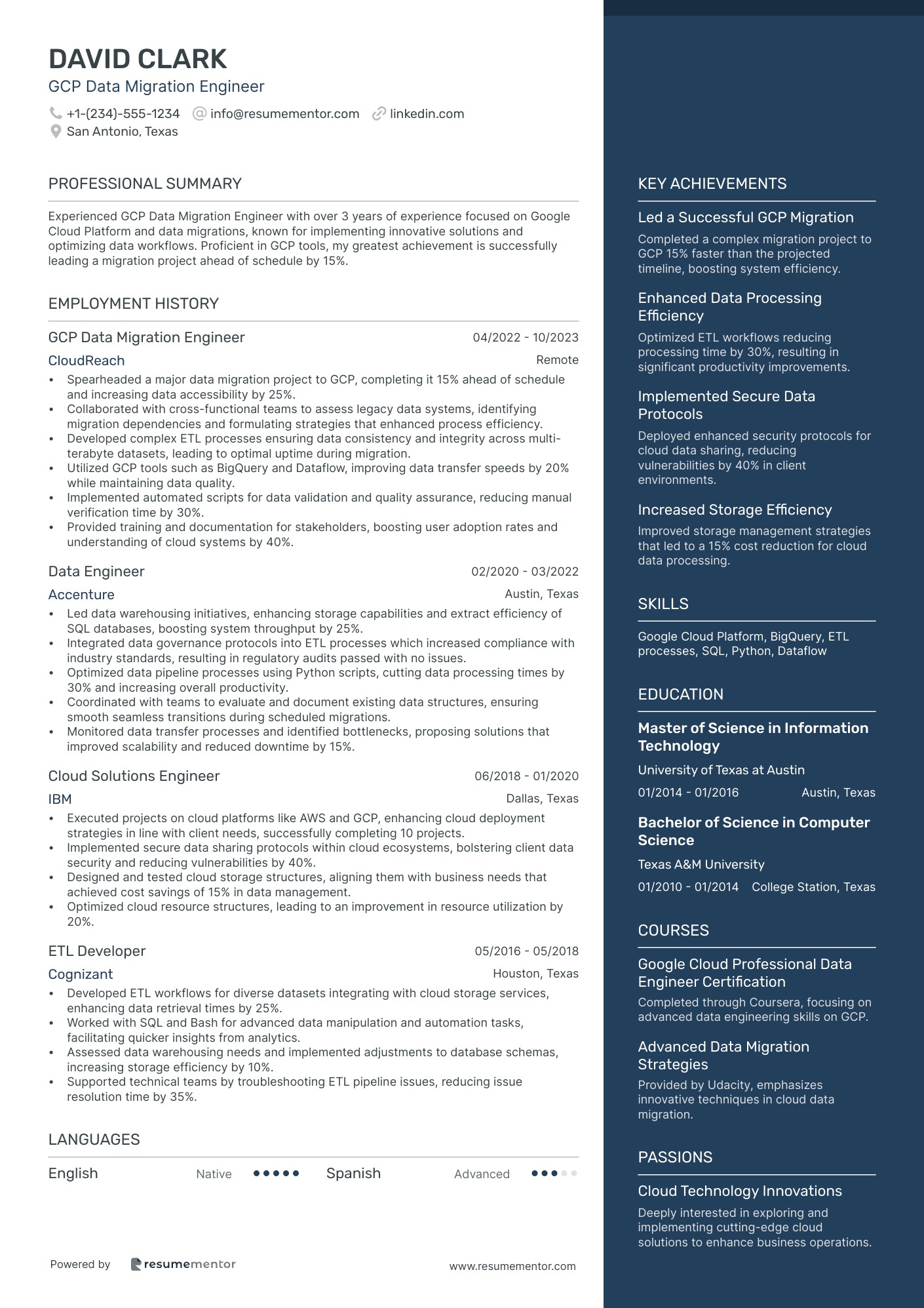

GCP Data Migration Engineer resume sample

- Spearheaded a major data migration project to GCP, completing it 15% ahead of schedule and increasing data accessibility by 25%.

- Collaborated with cross-functional teams to assess legacy data systems, identifying migration dependencies and formulating strategies that enhanced process efficiency.

- Developed complex ETL processes ensuring data consistency and integrity across multi-terabyte datasets, leading to optimal uptime during migration.

- Used GCP tools such as BigQuery and Dataflow, improving data transfer speeds by 20% while maintaining data quality.

- Implemented automated scripts for data validation and quality assurance, reducing manual verification time by 30%.

- Provided training and documentation for stakeholders, boosting user adoption rates and understanding of cloud systems by 40%.

- Led data warehousing initiatives, enhancing storage capabilities and extract efficiency of SQL databases, boosting system throughput by 25%.

- Integrated data governance protocols into ETL processes, increasing compliance with industry standards and passing regulatory audits with no issues.

- Optimized data pipeline processes using Python scripts, cutting data processing times by 30% and increasing overall productivity.

- Coordinated with teams to evaluate and document existing data structures, ensuring seamless transitions during scheduled migrations.

- Monitored data transfer processes and identified bottlenecks, proposing solutions that improved scalability and reduced downtime by 15%.

- Executed projects on cloud platforms like AWS and GCP, enhancing cloud deployment strategies in line with client needs and successfully completing 10 projects.

- Implemented secure data sharing protocols within cloud ecosystems, bolstering client data security and reducing vulnerabilities by 40%.

- Designed and tested cloud storage structures aligned with business needs, achieving 15% cost savings in data management.

- Optimized cloud resource structures, improving resource utilization by 20%.

- Developed ETL workflows for diverse datasets integrating with cloud storage services, improving data retrieval times by 25%.

- Worked with SQL and Bash for advanced data manipulation and automation tasks, facilitating quicker insights from analytics.

- Assessed data warehousing needs and implemented adjustments to database schemas, increasing storage efficiency by 10%.

- Supported technical teams by troubleshooting ETL pipeline issues, reducing issue resolution time by 35%.

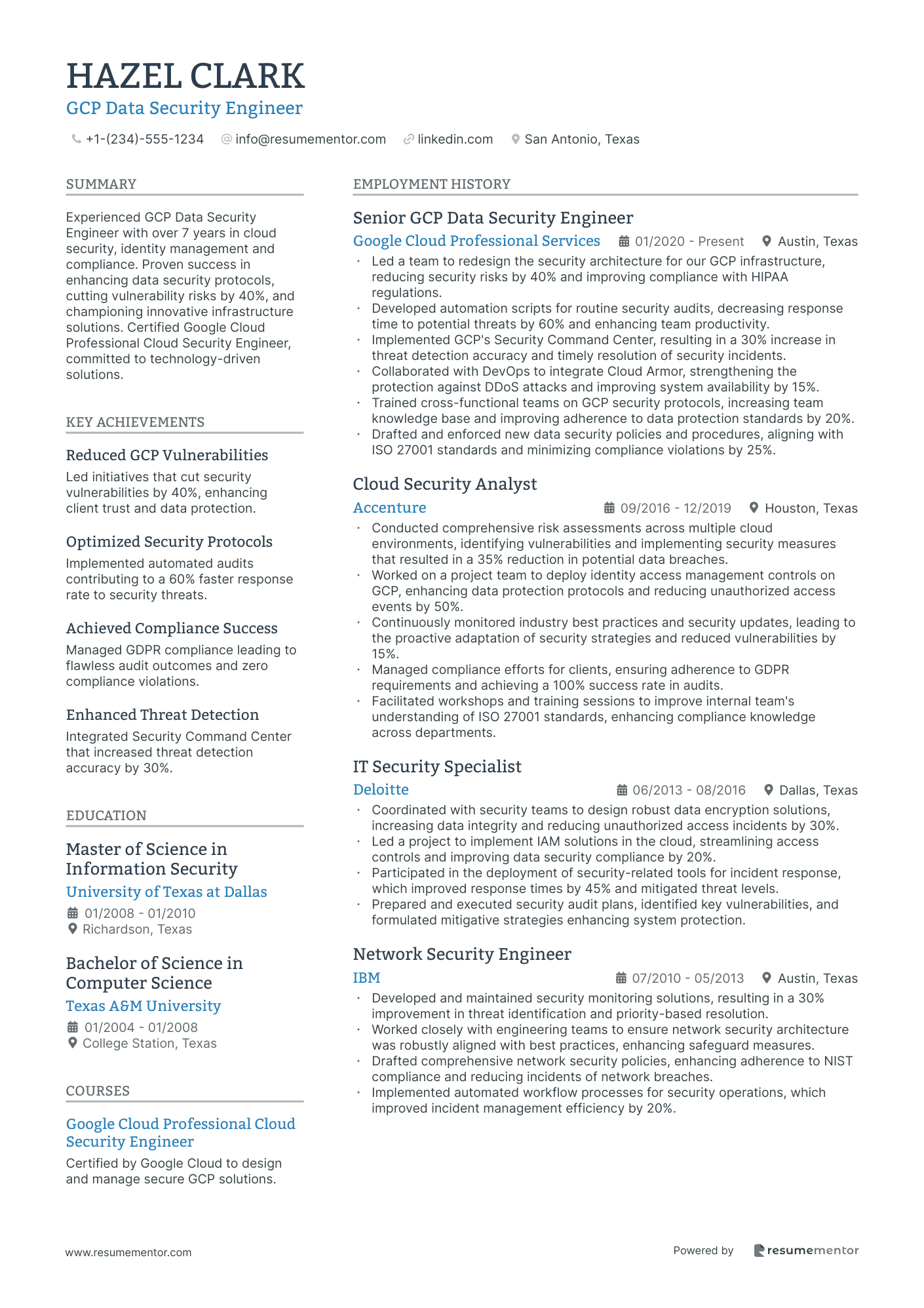

GCP Data Security Engineer resume sample

- Led a team to redesign the security architecture for our GCP infrastructure, reducing security risks by 40% and improving compliance with HIPAA regulations.

- Developed automation scripts for routine security audits, decreasing response time to potential threats by 60% and enhancing team productivity.

- Implemented GCP's Security Command Center, resulting in a 30% increase in threat detection accuracy and timely resolution of security incidents.

- Collaborated with DevOps to integrate Cloud Armor, strengthening protection against DDoS attacks and improving system availability by 15%.

- Trained cross-functional teams on GCP security protocols, expanding team knowledge and improving adherence to data protection standards by 20%.

- Drafted and enforced new data security policies and procedures, aligning with ISO 27001 standards and reducing compliance violations by 25%.

- Conducted comprehensive risk assessments across multiple cloud environments, identifying vulnerabilities and implementing security measures that resulted in a 35% reduction in potential data breaches.

- Worked on a project team to deploy identity and access management controls on GCP, enhancing data protection protocols and reducing unauthorized access events by 50%.

- Continuously monitored industry best practices and security updates, leading to proactive adaptation of security strategies and a 15% reduction in vulnerabilities.

- Managed compliance efforts for clients, ensuring adherence to GDPR requirements and achieving a 100% success rate in audits.

- Facilitated workshops and training sessions to improve internal teams' understanding of ISO 27001 standards, enhancing compliance knowledge across departments.

- Coordinated with security teams to design robust data encryption solutions, increasing data integrity and reducing unauthorized access incidents by 30%.

- Led a project to implement IAM solutions in the cloud, streamlining access controls and improving data security compliance by 20%.

- Participated in the deployment of security-related tools for incident response, which improved response times by 45% and mitigated threat levels.

- Prepared and executed security audit plans, identified key vulnerabilities, and formulated mitigation strategies that enhanced system protection.

- Developed and maintained security monitoring solutions, resulting in a 30% improvement in threat identification and priority-based resolution.

- Worked closely with engineering teams to align the network security architecture with best practices, strengthening safeguards.

- Drafted comprehensive network security policies, strengthening NIST compliance and reducing network breach incidents.

- Implemented automated workflow processes for security operations, which improved incident management efficiency by 20%.

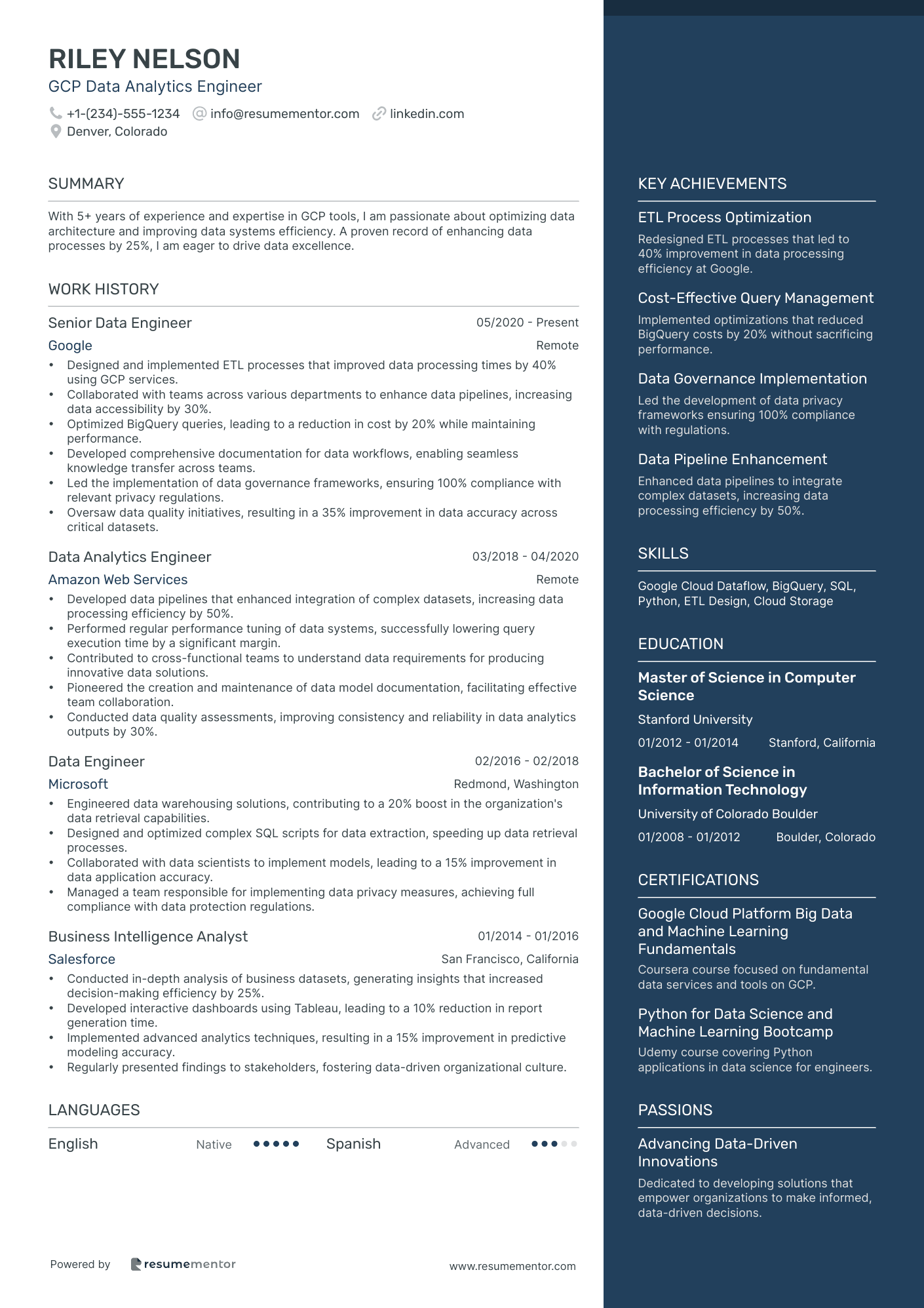

GCP Data Analytics Engineer resume sample

- Designed and implemented ETL processes that improved data processing times by 40% using GCP services.

- Collaborated with teams across various departments to enhance data pipelines, increasing data accessibility by 30%.

- Optimized BigQuery queries, reducing costs by 20% while maintaining performance.

- Developed comprehensive documentation for data workflows, enabling seamless knowledge transfer across teams.

- Led the implementation of data governance frameworks, ensuring 100% compliance with relevant privacy regulations.

- Oversaw data quality initiatives, resulting in a 35% improvement in data accuracy across critical datasets.

- Developed data pipelines that enhanced integration of complex datasets, increasing data processing efficiency by 50%.

- Performed regular performance tuning of data systems, substantially lowering query execution time.

- Worked with cross-functional teams to understand data requirements and produce innovative data solutions.

- Pioneered the creation and maintenance of data model documentation, facilitating effective team collaboration.

- Conducted data quality assessments, improving consistency and reliability in data analytics outputs by 30%.

- Engineered data warehousing solutions, contributing to a 20% boost in the organization's data retrieval capabilities.

- Designed and optimized complex SQL scripts for data extraction, speeding up data retrieval processes.

- Collaborated with data scientists to implement models, leading to a 15% improvement in data application accuracy.

- Managed a team responsible for implementing data privacy measures, achieving full compliance with data protection regulations.

- Conducted in-depth analysis of business datasets, generating insights that increased decision-making efficiency by 25%.

- Developed interactive dashboards using Tableau, leading to a 10% reduction in report generation time.

- Implemented advanced analytics techniques, resulting in a 15% improvement in predictive modeling accuracy.

- Regularly presented findings to stakeholders, fostering a data-driven organizational culture.

Navigating the complex world of cloud computing as a GCP data engineer is like captaining a state-of-the-art ship. Just as you guide projects through intricate data waters with your expertise in Google Cloud Platform, you must navigate the resume-writing process with precision. Capturing your skills on paper can feel hazy, yet it's crucial for landing your dream role.

Communicating your achievements can be challenging. Converting your technical prowess into clear, impactful descriptions requires more than just listing skills. It's about demonstrating your problem-solving abilities and the real impact of your projects with GCP tools. For this reason, presentation plays a significant role in making your resume stand out in a digital pile.

A resume template can streamline this process, offering a structured format. It helps you organize your career story clearly and professionally, ensuring your skills shine through. Using a template is like having a roadmap; it guides you in showcasing your journey effectively. Explore these resume templates to set sail.

With attention to detail and clarity, your resume becomes a powerful tool. It's more than just being seen—it's your connection to potential employers and the key to passing the initial screening. Think of your resume as a well-charted map, guiding you toward your next career opportunity.

Key Takeaways

- Crafting a GCP data engineer resume requires precision in showcasing your skills and achievements to navigate competitive job markets effectively.

- Utilizing a resume template can help organize your professional story, highlighting your journey in a structured and clear manner.

- Including contact information, a professional summary, technical skills, work experience with specific achievements, and education can make your resume stand out.

- Choosing the right layout, including the reverse chronological format, a modern font, and consistent margins, is crucial for a professional and easily readable document.

- A resume summary should concisely showcase your most significant experiences and skills, tailored to the job application to capture recruiters’ attention.

What to focus on when writing your GCP data engineer resume

As a GCP data engineer, your resume should effectively demonstrate your ability to design and manage scalable data pipelines on the Google Cloud Platform. Recruiters are eager to see your skills in transforming data, handling cloud storage, and leveraging big data analytics, as these are essential for building efficient data workflows. Highlighting your role in enhancing data infrastructure will make you an attractive candidate.

How to structure your GCP data engineer resume

- Contact Information—Include your full name, a reliable phone number, a professional email address, and your LinkedIn profile. These details are crucial for ensuring the recruiter can reach you easily. It's also a good idea to customize your LinkedIn profile URL with your name for a more professional touch.

- Professional Summary—Provide a concise overview of your experience and key skills. Mention your expertise with GCP services like BigQuery, Dataflow, and Cloud Storage. This section should capture your ability to integrate cloud solutions into business processes to drive results, setting the tone for the rest of the resume.

- Technical Skills—Clearly list the tools and technologies you are proficient in, such as Python, SQL, Apache Beam, and relevant GCP services. This section should reflect your expertise in the various aspects of data engineering, highlighting your experience in data warehousing, ETL processes, and machine learning pipelines to underline your technical capability.

- Work Experience—Describe your past roles with a focus on concrete achievements such as enhancing data pipeline efficiency or reducing costs. Using specific metrics to support your claims, like improved data processing time, demonstrates the measurable impact of your work, reinforcing your ability to generate results in real-world scenarios.

- Education—Include your degree(s) and any relevant certifications such as the Google Cloud Professional Data Engineer certification. This section affirms your educational background and commitment to ongoing learning in the field, which can be particularly compelling to employers looking for dedicated and knowledgeable experts.

To further set yourself apart, you might include optional sections detailing projects where you led cloud migrations or volunteer work in data science, which can highlight your initiative and passion for the field. Next, let's look at how to format your resume so that each section is both impactful and well-structured.

Which resume format to choose

As a GCP data engineer, ensuring your resume format works in your favor is crucial for catching the attention of hiring managers. The reverse chronological format is a strategic choice, as it allows potential employers to instantly grasp your most recent and relevant experience, which is particularly valuable in the fast-evolving tech landscape where current skills and achievements can set you apart.

When selecting a font, it’s important to choose one that not only enhances readability but also presents a modern and professional image. Fonts like Rubik, Lato, and Montserrat are excellent choices. They provide a clean and contemporary feel without the formality that can sometimes make a resume feel rigid or outdated.

Preserving your resume’s format is best achieved by saving it as a PDF. This format ensures that the document remains as designed, with no unexpected shifts in layout or fonts when viewed on different devices or software, which helps maintain a polished and professional appearance.

Margins can quietly influence the effectiveness of your resume. Maintaining one-inch margins on all sides not only provides necessary white space but also frames your content neatly, making it inviting and easy to navigate. This balance between text and space can significantly impact how your resume is perceived, ensuring that your skills and experiences are not overshadowed by a cluttered presentation.

Incorporating these elements thoughtfully will create a well-rounded and effective resume that highlights your unique abilities and experience as a GCP data engineer.

How to write a quantifiable resume experience section

The experience section of your GCP Data Engineer resume is a key element for distinguishing yourself in the job market. Employers want to understand how you’ve effectively applied your skills, so emphasize clear accomplishments and specific projects where you made a significant impact. Structuring your experience in reverse chronological order highlights your most recent roles, ensuring alignment with the job you're targeting. Keep your job history limited to the last 10-15 years to maintain relevancy. To tailor your resume to the job, use language and keywords from the job listing. Employ strong action words like “implemented,” “optimized,” and “engineered” to vividly describe your achievements.

- Streamlined a scalable ETL pipeline on Google Cloud Platform, trimming data processing time by 40%.

- Worked with cross-functional teams to deliver cloud-based data solutions, boosting data retrieval speed by 30%.

- Honed data storage strategies, cutting cloud storage costs by 25%.

- Guided a team of 5 data engineers, enhancing team efficiency and project delivery by 15%.

This experience section effectively illustrates your skills and results as a GCP Data Engineer, making your resume stand out. The focus on specific, quantifiable outcomes like reducing data processing time by 40% and cutting storage costs by 25% clearly demonstrates your value. Using action-oriented words such as “streamlined” and “honed” underscores your initiative and technical proficiency, which are essential for excelling in data engineering roles.

Arranging your experiences in reverse chronological order brings attention to your most current and relevant work, meeting employer expectations. This approach, combined with tailoring content to reflect the job description using specific technologies and improvements, shows your clear understanding of the role’s requirements. Additionally, by highlighting leadership and collaboration abilities, you emphasize your capacity for teamwork and successful project management, further enhancing your profile.
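To arrive at figures like the 40% reduction in the example bullets, compare a metric before and after your change. The short Python sketch below shows that arithmetic, assuming you have a baseline and a new measurement in the same unit. The function name `percent_reduction` and the 50- and 30-minute runtimes are hypothetical, chosen only for illustration.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage drop from a baseline `before` to a new value `after`.

    Hypothetical helper for turning before/after measurements into a
    resume-ready figure; both values must use the same unit.
    """
    if before <= 0:
        raise ValueError("baseline must be a positive number")
    # (old - new) / old, expressed as a percentage and rounded to one decimal place
    return round((before - after) / before * 100, 1)


if __name__ == "__main__":
    # Hypothetical example: a pipeline run that took 50 minutes now takes 30.
    saved = percent_reduction(50, 30)
    print(f"Trimmed data processing time by {saved:.0f}%")
    # prints: Trimmed data processing time by 40%
```

Use real numbers from your own monitoring or billing reports, so that every percentage on your resume is one you can explain in an interview.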

Responsibility-Focused resume experience section

A responsibility-focused GCP Data Engineer resume experience section should effectively convey your expertise and the impact of your work. Begin by highlighting the key tasks and achievements that showcase your skills with GCP services and data solutions. Illustrate your role within teams or projects, emphasizing outcomes like increased performance or cost savings to demonstrate your value. Use action verbs and bullet points for clarity, ensuring that each detail flows seamlessly into the next to tell a cohesive story of your contributions.

Each bullet point should succinctly describe a specific achievement, connecting your efforts to tangible results. This approach not only makes the information easy to read but also highlights how your technical skills have led to meaningful improvements. Present your experiences in a way that shows a clear progression of impact and responsibility. Here is how such a section might look:

GCP Data Engineer

InnovateTech Solutions

2020-2023

- Designed and implemented data pipelines with Google Cloud Dataflow, which reduced data processing times by 30%.

- Collaborated with cross-functional teams to construct cloud-native applications, enhancing data accessibility while cutting costs by 15%.

- Optimized BigQuery data warehouse structures, improving query performance by 40% and speeding up data retrieval.

- Enhanced data accuracy and reliability by 20% by automating data validation processes using Google Cloud Functions.

Technology-Focused resume experience section

A technology-focused GCP Data Engineer resume experience section should clearly illustrate your impact and accomplishments in each role you've held. Start by listing your relevant positions, including freelance or consulting work related to Google Cloud Platform. Detail how you added value to your team or project, using specific achievements to draw a clear picture of your contributions. Strong action words paired with quantifiable results show both what you accomplished and how much it mattered.

As an expert in Google Cloud tools and technologies, your resume should emphasize your skills in designing and implementing scalable data architectures. Weave in descriptions of specific projects where GCP services like BigQuery or Dataflow were used to tackle challenges, illustrating your hands-on experience. Tailor your language to seamlessly reflect the job you’re applying for, ensuring each sentence builds upon the last. Prioritize clarity and relevance to make your resume an impressive reflection of your professional journey.

Senior GCP Data Engineer

Tech Innovations Co.

January 2020 - Present

- Implemented ETL pipelines using Google Cloud Dataflow, cutting data processing time by 30%.

- Optimized storage solutions with BigQuery, achieving a 15% annual cost reduction.

- Collaborated with cross-functional teams to deploy machine learning models on AI Platform.

- Created data visualization dashboards using Google Data Studio to enhance business insights.

Innovation-Focused resume experience section

An innovation-focused GCP Data Engineer resume experience section should demonstrate how you use Google's cloud tools to drive new solutions. Begin by showcasing the outcomes of your work, highlighting the methods and technologies you used to achieve them. Use action verbs that convey a proactive approach to tackling challenges, and highlight achievements that show your knack for improving or reinventing processes to increase efficiency.

For each role, state the time frame of your experience to set the stage. Clearly define your role and the specific impact you had on projects. Instead of merely listing tasks, focus on the processes you optimized and the solutions you developed, and explain why they mattered. Use metrics to show concretely how your innovations affected the organization. This approach underscores your technical expertise and creativity and helps position you as someone who drives change in your field.

Data Engineer

Tech Innovators Inc.

June 2020 - August 2022

- Created an automated ETL process on GCP, cutting data processing time by 30%.

- Implemented a machine learning model for predictive analytics, boosting forecast accuracy by 20%.

- Developed a real-time data pipeline, enhancing data availability and decision-making speed, leading to a 15% increase in operational efficiency.

- Led a team in migrating legacy systems to GCP, slashing infrastructure costs by 40%.

Growth-Focused resume experience section

A growth-focused GCP Data Engineer resume experience section should seamlessly highlight your relevant achievements and skills. Start by selecting experiences that showcase your abilities in using GCP to drive tangible results. Describe your key contributions with specific, measurable outcomes, incorporating metrics to vividly illustrate your impact. The language should be straightforward, contributing to a smooth flow that engages readers.

Organize your accomplishments into logical, cohesive bullet points that build on one another. Focus on various elements of your role, from your problem-solving skills to teamwork and technical expertise. Each bullet should clearly demonstrate the results of your actions. Ensure this section is tailored to the specific job you're applying for, highlighting experiences that align with your career growth goals and resonate with the job's requirements.

GCP Data Engineer

Tech Innovations Ltd

June 2020 - Present

- Implemented a data pipeline using GCP services, which reduced processing time by 40% and improved data access for stakeholders.

- Optimized cloud storage solutions, successfully lowering costs by 25% while maintaining data integrity.

- Effectively managed ETL processes to handle and analyze over 1 TB of data daily, supporting real-time decision-making.

- Collaborated with cross-functional teams to design and deploy cloud infrastructure, ensuring alignment with company goals.

Write your GCP data engineer resume summary section

Your GCP Data Engineer resume should begin with a well-crafted summary. This section gives a quick look at your skills and achievements and acts as the gateway to the rest of your resume. Since it's the first thing a recruiter sees, it should make a strong impression immediately. Use this space to highlight your most important experiences and accomplishments, and tailor it to the job you're applying for. For a GCP Data Engineer, include your core technical skills and significant projects to show your expertise clearly. Consider this attention-grabbing example:

This example makes clear what you bring to the role by highlighting your essential experience and skills. It combines technical expertise with teamwork abilities and underlines your impact on system improvements.

It's vital to grasp the differences among a summary, resume objective, resume profile, and a summary of qualifications to choose the right approach. While a resume summary emphasizes your career achievements and skills, a resume objective is suited for those new to the field, focusing on future aspirations. Meanwhile, a resume profile combines elements of both, and a summary of qualifications lists key strengths in bullets.

Selecting the right format rests on your career stage and objectives. An effective summary is not only concise but also sharply relevant to the job. When you’re new to the field, a resume objective can powerfully express your eagerness to learn and grow. Wherever possible, quantify achievements to make your story more compelling. Personalizing your resume for each application ensures you stand out. Remember, every word should reflect your professional brand.

Listing your GCP data engineer skills on your resume

A skills-focused GCP data engineer resume should clearly showcase your abilities, strengths, and technical expertise. You can list your skills in a separate section or weave them into your experience and summary sections, as long as they support your overall narrative. Soft skills, or strengths, show your ability to collaborate effectively and solve complex problems. Hard skills, which are specific and measurable, such as proficiency in a programming language or software tool, are equally vital. Together, these skills act as keywords that catch the attention of both applicant tracking systems and hiring managers.

Creating a well-structured skills section is crucial for clarity. Here’s a straightforward format to consider for effectively listing your key competencies:

This example delivers clarity by listing specific and relevant skills. It presents a quick overview of your qualifications as a GCP Data Engineer, ensuring that hiring managers can easily recognize your expertise. Clearly highlighting these skills demonstrates your readiness for the job and positions you as a top candidate.

Best hard skills to feature on your GCP data engineer resume

Focusing on hard skills is crucial for a GCP data engineer, as these emphasize the technical expertise needed to manage data on the Google Cloud Platform. These abilities communicate your aptitude for handling complex data projects effectively.

Hard Skills

- Google Cloud Platform (GCP)

- BigQuery

- Cloud Dataflow

- Cloud Pub/Sub

- Data Modeling

- Python

- SQL

- Kubernetes

- Terraform

- Cloud Functions

- Data Warehousing

- Machine Learning

- Apache Beam

- Cloud Storage

- ETL Processes

Best soft skills to feature on your GCP data engineer resume

Alongside hard skills, featuring soft skills is essential because they highlight your interpersonal abilities and your approach to tackling challenges. These skills reflect your communication, leadership, and problem-solving strengths.

Soft Skills

- Communication

- Problem-Solving

- Collaboration

- Time Management

- Adaptability

- Analytical Thinking

- Attention to Detail

- Creativity

- Leadership

- Emotional Intelligence

- Conflict Resolution

- Project Management

- Critical Thinking

- Teamwork

- Organizational Skills

How to include your education on your resume

An education section is crucial for your GCP Data Engineer resume. It helps showcase your background knowledge and skills relevant to the position. Tailor this section specifically for the job by including only relevant education. Avoid listing unrelated education that does not contribute to your qualifications.

Including your GPA can be beneficial if it's impressive. Mention it clearly like "GPA: 3.8/4.0". If you graduated with honors, such as cum laude, add it beside your degree. List your degree concisely, stating the title, institution, and graduation date.

The second example is excellent for a GCP Data Engineer because it highlights a Master's in Data Engineering, a directly relevant field. Mentioning 'cum laude' signals academic excellence, and the high GPA adds further weight to the qualifications. The section is well organized and clearly shows key accomplishments that align with the job.

How to include GCP data engineer certificates on your resume

Including a certificates section in your GCP data engineer resume is crucial. List the name of the certificate first so it grabs attention. Include the date you earned it to show that your knowledge is current, and add the issuing organization so employers can verify its credibility.

Certificates can also be featured in the header to catch the eye immediately. For example, "Google Cloud Certified Professional Data Engineer | AWS Certified Solutions Architect."

A good example of a standalone certificates section looks like this:

This example is strong because it includes certificates directly relevant to a GCP data engineer role. It lists the name of each certificate, includes the issuing organization for validation, and shows your ongoing dedication to improving your skills. This makes your resume more credible and aligns your expertise with the job requirements.

Extra sections to include in your GCP data engineer resume

Crafting a robust resume that stands out is vital for any GCP Data Engineer aiming for career advancement. Including diverse sections can provide a well-rounded view of your skills and personality, enhancing your desirability to potential employers.

Language section — List languages you know fluently or are learning. This demonstrates cultural versatility and potential for international projects.

Hobbies and interests section — Share your hobbies to show personal traits like creativity or teamwork. This helps employers see you as a well-rounded individual.

Volunteer work section — Detail your volunteer experiences and roles. This emphasizes your community engagement and ethical values.

Books section — Include a list of relevant technical books or industry-related literature you’ve read. This shows your dedication to continuous learning and staying current in your field.

In Conclusion

In conclusion, crafting an effective resume as a GCP Data Engineer is essential for standing out in a competitive job market. By carefully highlighting your skills, achievements, and professional journey, you turn your resume into a narrative of your capabilities and readiness for the role. Use a well-structured template that puts both your technical expertise and your soft skills front and center. Prioritize quantifiable achievements, since they serve as concrete evidence of your contributions and impact. Tailor your resume to each application, using the specific language and requirements in the job description to align your experience with the employer's needs. A mix of hard and soft skills presents you as a candidate capable of both technical execution and effective collaboration, while sections such as certifications and relevant education show your commitment to continuous learning and professional growth. With careful presentation and attention to detail, your resume can serve as a compelling introduction to potential employers and open the door to your next role in GCP data engineering.


© 2026. All rights reserved.
